AI Agent Evolution and Key Challenges

AI agent platforms have rapidly evolved from simple text generators to sophisticated systems capable of reasoning, planning, and taking action. The ability to connect LLMs with external tools and data has transformed what’s possible with AI, enabling more robust applications that can assist humans in increasingly complex tasks.

The integration of AI models with external systems isn’t just a nice-to-have feature—it’s becoming essential for any serious AI implementation. Modern systems need to seamlessly blend the reasoning capabilities of LLMs with access to up-to-date information, specialized tools, and company-specific data. Without this integration, AI assistants remain limited to the data they were trained on, unable to perform meaningful real-world actions.

At the heart of this challenge lies a fundamental question: how should AI models communicate with external systems? Two leading approaches have emerged in the market—OpenAI’s Responses API and Anthropic’s Model Context Protocol (MCP). While both aim to solve similar problems, they take notably different architectural approaches that reflect deeper philosophical differences about how AI should interact with the world.

The choice between these platforms isn’t trivial. It affects everything from developer experience and performance to security, cost, and long-term viability. This guide examines the key differences and offers insights to help you decide which solution best fits your AI integration strategy.

Core Concepts of OpenAI Responses API and MCP

Understanding OpenAI’s Responses API

The Responses API represents OpenAI’s evolution from their earlier APIs. It merges the simplicity of Chat Completions with more powerful capabilities previously segregated in the Assistants API, providing developers with a streamlined way to build AI agents.

Compared to Chat Completions, the Responses API is designed to simplify AI integration: OpenAI’s models can be combined directly with built-in tools such as web search and computer use. This shift reduces the complexity once required to achieve similar functionality.

Key features of the Responses API include:

- Simplified interfaces for handling multi-turn conversations

- Built-in tools that provide the model with up-to-date information

- Function calling capabilities for seamless integration with external services

- Streamlined error handling and response formatting

Understanding Anthropic’s Model Context Protocol (MCP)

Anthropic’s Model Context Protocol takes a fundamentally different approach, functioning as a standardized protocol. Think of it as a “USB-C port” for AI applications, as described in the comprehensive guide to MCP origins and functionality.

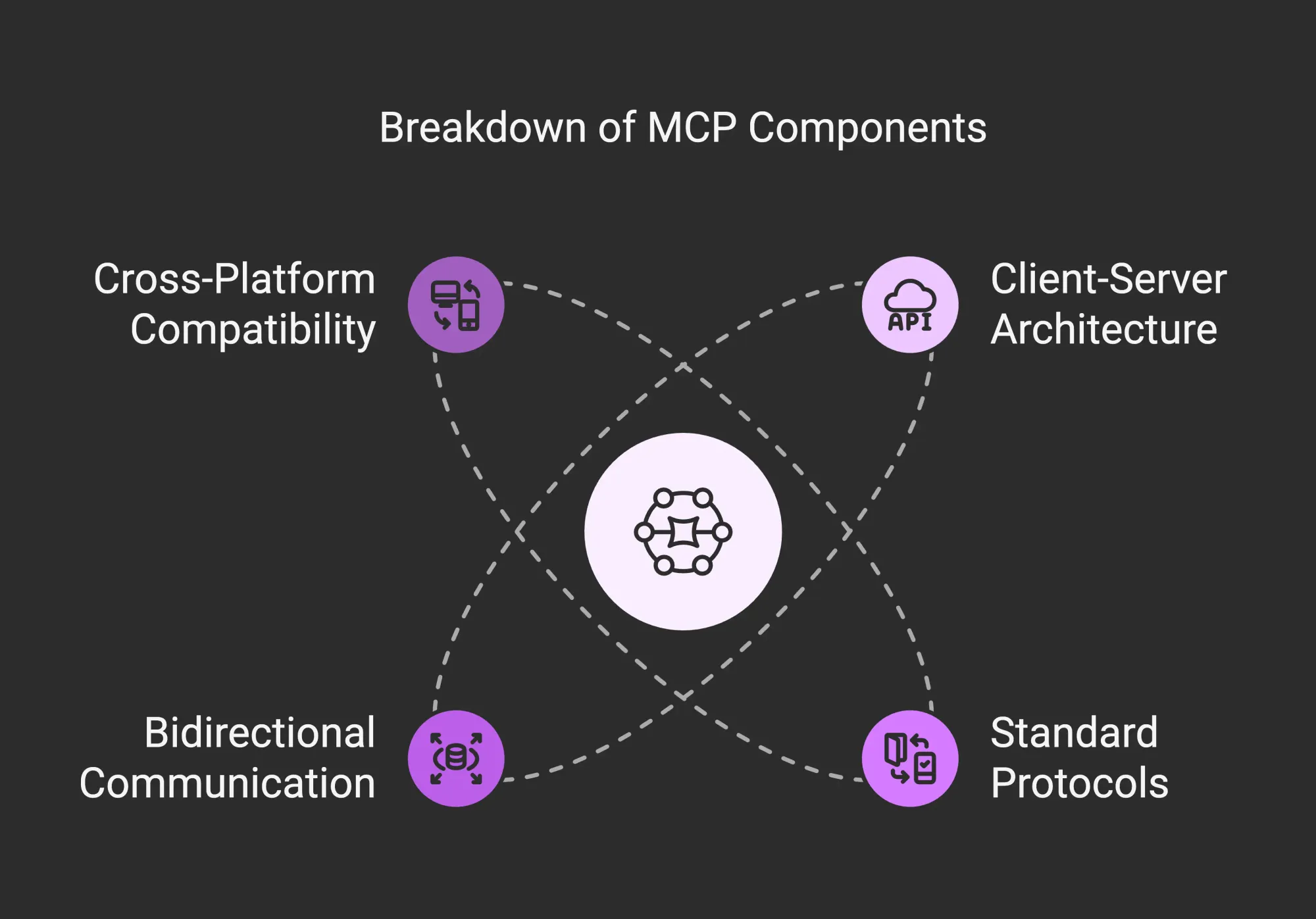

MCP establishes a client-server architecture where an AI-powered application (the client) connects to various MCP servers, each providing access to specific tools or data sources. This abstraction layer between the AI model and external resources can enable greater interoperability and flexibility.

MCP’s core components include:

- A client-server architecture for connecting AI with external resources

- Standard protocols for exchanging prompts, resources, and tools

- Bidirectional communication between AI models and data sources

- Cross-platform compatibility across different AI providers
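
To make the client-server exchange more concrete, here is a minimal sketch of the kind of JSON-RPC 2.0 messages an MCP client and server trade when listing and calling tools. It runs entirely in-process; the `get_weather` tool, its handler, and the helper names `make_request` and `handle_request` are hypothetical and exist only for illustration. A real MCP session also begins with an initialization handshake and runs over a transport such as standard input/output or HTTP, both of which this sketch leaves out.

```python
import json

# Hypothetical tool registry for a toy in-process "server".
TOOLS = {
    "get_weather": {
        "description": "Return a canned weather report for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": lambda args: f"Sunny in {args['city']}",
    }
}


def make_request(req_id, method, params=None):
    """Build a JSON-RPC 2.0 request string, as a client would."""
    message = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def error_response(req_id, code, message):
    """Build a JSON-RPC 2.0 error response string."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw):
    """Dispatch one request on the toy server and return the response string."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {
            "tools": [
                {
                    "name": name,
                    "description": tool["description"],
                    "inputSchema": tool["inputSchema"],
                }
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error_response(req["id"], -32602, "Unknown tool")
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error_response(req["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# The client first discovers the available tools, then calls one of them.
listing = json.loads(handle_request(make_request(1, "tools/list")))
call = json.loads(
    handle_request(
        make_request(2, "tools/call", {"name": "get_weather", "arguments": {"city": "Oslo"}})
    )
)
print(listing["result"]["tools"][0]["name"])
print(call["result"]["content"][0]["text"])
```

Because the client only depends on the message shapes, the same client code could talk to any server that exposes tools this way, which is the interoperability argument made above.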

Philosophical Differences in Integration Approaches

These divergent approaches reflect deeper philosophical differences:

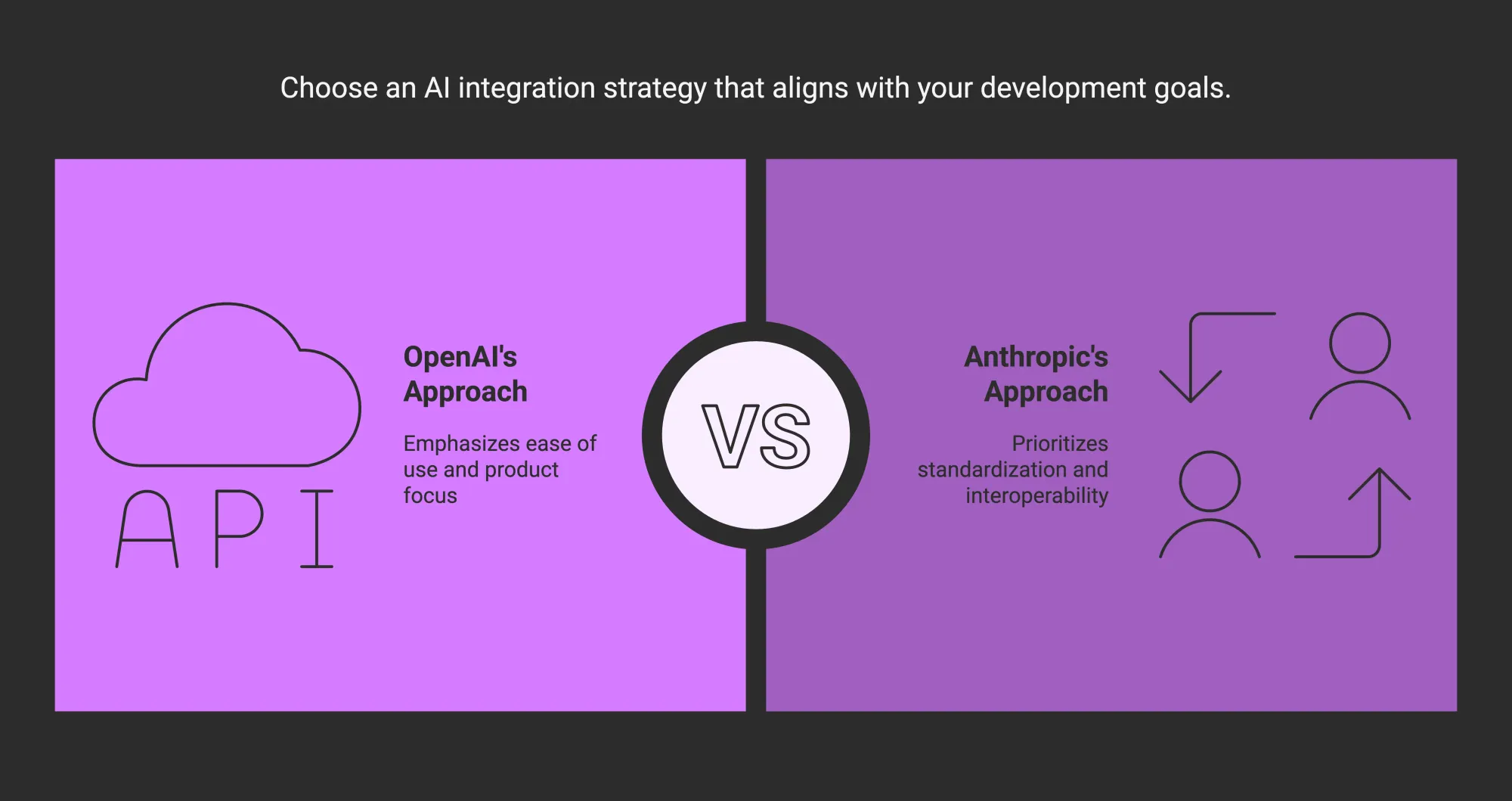

- OpenAI’s approach is more centralized and product-focused, emphasizing ease of use through tight integration with their specific models and tools.

- Anthropic’s approach is more decentralized and protocol-focused, prioritizing standardization and interoperability.

These differences shape how developers integrate AI with external systems and factor into long-term strategic decisions.

Technical Architecture Insights

Comparing Tool Integration Models

OpenAI’s Function Calling Mechanism

The Responses API relies on a function calling mechanism that allows the AI to determine when and how to call functions based on user intent. As detailed in OpenAI’s function calling documentation, developers define functions with JSON schemas, the LLM decides when to invoke them, and the function returns structured data for continued conversation.

This approach lets the model choose which tools to use and when, making interactions more natural and reducing the need for explicit user instructions.
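
The define, invoke, return cycle described above can be sketched without any network call. The example below defines one tool with a JSON Schema, simulates the item a model might emit when it decides to call that tool, and packages the local result for the next turn. The field names are modeled on OpenAI’s function calling documentation as best understood here and should be checked against the current API reference; `get_order_status`, the order data, and `run_function_call` are hypothetical.

```python
import json

# Tool definition: the model sees the name, description, and JSON Schema.
GET_ORDER_STATUS_TOOL = {
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the shipping status of an order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
        "additionalProperties": False,
    },
}


def get_order_status(order_id):
    """Hypothetical local function; a real one would query a database."""
    return {"order_id": order_id, "status": "shipped"}


# Maps tool names the model may request to local Python callables.
LOCAL_FUNCTIONS = {"get_order_status": get_order_status}


def run_function_call(call):
    """Execute a model-requested call and package the result for the model."""
    func = LOCAL_FUNCTIONS[call["name"]]
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    result = func(**args)
    return {
        "type": "function_call_output",
        "call_id": call["call_id"],  # ties the output back to the request
        "output": json.dumps(result),
    }


# Simulated model output: in a real exchange this item comes from the API.
simulated_call = {
    "type": "function_call",
    "name": "get_order_status",
    "arguments": '{"order_id": "A123"}',
    "call_id": "call_001",
}

reply = run_function_call(simulated_call)
print(reply["output"])
```

In a live integration, the tool definition would be sent with the request and `reply` would be appended to the conversation input so the model can continue with the structured result.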

MCP’s Standardized Protocol

Anthropic’s MCP defines a standard protocol for communication between AI models and external resources. This produces a cleaner separation between the model and the systems it uses, potentially making it easier to integrate with a wide range of tools. An external comparison of MCP and OpenAI’s offerings highlights MCP’s flexibility in heterogeneous environments.

Context Window Management in AI Agents

Managing Context Limitations

OpenAI focuses on efficient token usage to maximize the available context window. Understanding context windows in OpenAI models is crucial for developers aiming to maintain coherent conversations and reference relevant information.

Anthropic emphasizes structured knowledge representation, as outlined in context windows in Claude. This can help organize information more effectively within the available context.
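
Whichever platform is used, the application usually has to keep the conversation inside a token budget. The sketch below shows one common strategy, assuming a simple list of role/content messages: keep the system message and drop the oldest turns first. The `estimate_tokens` heuristic (about four characters per token) is a rough assumption and not a real tokenizer, and both function names are hypothetical.

```python
def estimate_tokens(text):
    """Crude heuristic: roughly 4 characters per token. Not a real tokenizer."""
    return max(1, len(text) // 4)


def trim_history(messages, budget):
    """Keep the leading system message plus the newest turns that fit the budget."""
    has_system = bool(messages) and messages[0]["role"] == "system"
    system = messages[:1] if has_system else []
    rest = messages[len(system):]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards so the most recent turns are kept first.
    for msg in reversed(rest):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return system + kept[::-1]


history = [
    {"role": "system", "content": "You are helpful."},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 40},
]

trimmed = trim_history(history, budget=25)
print([m["role"] for m in trimmed])
```

With this budget the oldest user turn is dropped while the system message and the two most recent turns survive. A production system would count tokens with the provider’s tokenizer and might summarize dropped turns instead of discarding them.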

Conversation Memory Strategies

OpenAI’s Responses API offers built-in conversation state management, while MCP leverages its client-server model to maintain state outside the main model, potentially improving context control for complex applications.

Implications for Multi-turn Interactions

OpenAI’s simpler conversation approach can be advantageous for straightforward applications. MCP’s structured model may offer more fine-grained control over context, benefiting projects with intricate multi-turn dialogs.

Programming Language and SDK Support

Available SDKs and Compatibility

OpenAI provides official SDKs in Python, Node.js, and .NET, simplifying tasks like authentication and error handling. Anthropic offers SDKs for MCP primarily in Python and JavaScript/TypeScript. Both approaches streamline integration in popular programming environments.

Language Support Comparison

OpenAI’s coverage is generally broader, though MCP, as an open protocol built on JSON-RPC 2.0, can be implemented in any language that can exchange JSON messages over standard input/output or HTTP. The choice often depends on existing infrastructure and developer preferences.

Effects on Development Workflow

OpenAI’s approach can feel more familiar to those used to REST APIs, while MCP requires learning a protocol-based model. MCP can reward that extra effort with potential gains in flexibility and interoperability.

Developer Experience and Integration

SDK Quality Analysis

OpenAI’s documentation is noted for clarity, offering detailed guides and examples. Anthropic’s MCP documentation explains the underlying protocol and architectural concepts thoroughly, focusing on how to leverage standardization.

Examples from both platforms illustrate specific use cases. OpenAI’s tend to be more task-oriented, while Anthropic’s focus on protocol capabilities.

Learning Curve Comparison

OpenAI’s Responses API is approachable, leveraging familiar REST patterns. MCP’s protocol-based system may take longer to master but can be more adaptable for complex implementations.

Integration Complexity Assessment

OpenAI requires minimal boilerplate for basic functionality. MCP typically demands more initial setup to establish client-server communication, which can be beneficial in large-scale or specialized projects.

Implementation Time Estimates

Developers report simple OpenAI integrations can often be completed in hours or days, while MCP might require longer initial development but potentially offers more streamlined control once everything is established.

Performance and Scalability

Response Time Benchmarks

Latency for OpenAI requests typically ranges from under a second to several seconds, depending on the model, prompt size, and output length. MCP may add overhead due to the extra client-server hop between the application and each tool server, though optimizations can mitigate this.

Developers should monitor token count, network latency, and load to maintain consistent performance. Reducing hidden costs in function calling offers strategies to manage potential inefficiencies.
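
One lightweight way to monitor latency is to time repeated calls and report percentiles rather than a single average. The sketch below is a minimal example using only the standard library; `measure_latency` is a hypothetical helper, and the `fake_request` function stands in for a real API or MCP tool call.

```python
import math
import statistics
import time


def measure_latency(func, runs=20):
    """Call func repeatedly and return p50, p95, and max latency in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    samples.sort()
    # Nearest-rank 95th percentile over the sorted samples.
    p95_index = math.ceil(0.95 * len(samples)) - 1
    return {
        "p50": statistics.median(samples),
        "p95": samples[p95_index],
        "max": samples[-1],
    }


def fake_request():
    """Placeholder for a real model or tool call."""
    time.sleep(0.001)


stats = measure_latency(fake_request, runs=20)
print({name: round(value, 4) for name, value in stats.items()})
```

Tracking p95 alongside the median makes slow outliers visible, which matters when a single agent turn chains several model and tool calls.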

Throughput Capabilities

OpenAI and Anthropic both enforce rate limits and quotas, with higher tiers available for enterprise customers. MCP’s distributed model can help scale requests, though any underlying service still faces overall rate limitations.
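
Since rate limits apply on either platform, client code typically retries rejected requests with exponential backoff and jitter. The following is a minimal sketch of that pattern; `RateLimitError` is a hypothetical stand-in for whatever exception a given SDK raises on an HTTP 429 response, and `call_with_backoff` is an illustrative helper rather than part of any library.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's rate-limit (HTTP 429) error."""


def call_with_backoff(func, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Retry func on RateLimitError, doubling the wait each attempt plus jitter."""
    for attempt in range(max_retries):
        try:
            return func()
        except RateLimitError:
            if attempt == max_retries - 1:
                raise  # out of retries: surface the error to the caller
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)


# Demo: a function that is rate limited twice before succeeding.
attempts = {"count": 0}


def flaky_request():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimitError()
    return "ok"


recorded_delays = []  # capture delays instead of actually sleeping
outcome = call_with_backoff(flaky_request, sleep=recorded_delays.append)
print(outcome, len(recorded_delays))
```

The `sleep` parameter is injectable so the retry logic can be tested without real waiting. The jitter term spreads out retries from many clients that were throttled at the same moment.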

Security and Compliance

Data Protection Features

Both platforms use industry-standard TLS for data in transit and encryption at rest. Access control generally relies on API keys or tokens, with additional organization-level controls in place for enterprise tiers.

Regulatory Compliance

OpenAI supports GDPR compliance, HIPAA eligibility, and SOC 2 certification. Anthropic offers similarly robust compliance measures, although specifics can depend on your service tier or contractual arrangement.

Risk Assessment Framework

OpenAI’s centralized approach can be more straightforward to secure as a single entity, while MCP’s distributed architecture may allow finer control over each connection. Both platforms have strong vulnerability management procedures.

Cost Analysis and Budget Planning

Pricing Structure Comparison

OpenAI and Anthropic primarily use token-based pricing. Volume discounts may be available for large-scale implementations, but it’s important to watch for hidden costs related to development, infrastructure, or function calling overhead.
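
Token-based pricing reduces to simple arithmetic, and writing it down makes the function calling overhead visible: tool definitions are sent with the request and count as input tokens. The sketch below assumes per-million-token prices; the prices and token counts used are placeholders chosen for easy arithmetic, not real rates from either provider, and `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(
    input_tokens,
    output_tokens,
    input_price_per_million,
    output_price_per_million,
    tool_schema_tokens=0,
):
    """Estimate the cost of one request in the same currency as the prices."""
    billed_input = input_tokens + tool_schema_tokens  # schemas count as input
    total = (
        billed_input * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


# Placeholder prices: 2.00 per million input tokens, 8.00 per million output.
plain = estimate_cost(2_000, 500, 2.00, 8.00)
with_tools = estimate_cost(2_000, 500, 2.00, 8.00, tool_schema_tokens=300)

print(f"per request without tools: {plain:.4f}")
print(f"per request with tools:    {with_tools:.4f}")
print(f"daily cost at 10,000 requests with tools: {with_tools * 10_000:.2f}")
```

Under these placeholder numbers, 300 tokens of tool definitions raise the per-request cost from 0.0080 to 0.0086, a 7.5% increase that compounds across every request carrying those definitions.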

Total Cost of Ownership (TCO) Calculation

OpenAI typically requires less upfront development time, while MCP might reduce ongoing complexity for highly specialized or large-scale setups. Factoring in maintenance and scaling needs is crucial when planning your budget.

Budget Optimization Strategies

Optimizing prompts to reduce token usage, caching frequent queries, and choosing lower-cost models for simpler tasks can all help manage expenses for either platform. Balancing performance requirements with budget constraints is key.
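
Caching frequent queries can be as simple as keying stored answers by model and normalized prompt. The sketch below is one possible in-memory version; `ResponseCache` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real, billable API call. Normalizing case and whitespace is an assumption that only suits prompts where those differences do not change the expected answer.

```python
import hashlib


class ResponseCache:
    """In-memory cache keyed by a hash of (model, normalized prompt)."""

    def __init__(self, fetch):
        self._fetch = fetch  # callable(model, prompt) -> str
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, prompt):
        # Collapse whitespace and lowercase so trivial variants share a key.
        normalized = " ".join(prompt.split()).lower()
        return hashlib.sha256(f"{model}\n{normalized}".encode("utf-8")).hexdigest()

    def get(self, model, prompt):
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._fetch(model, prompt)  # only pay for cache misses
        self._store[key] = answer
        return answer


def fake_fetch(model, prompt):
    """Placeholder for a real API call."""
    return f"[{model}] answer to: {prompt}"


cache = ResponseCache(fake_fetch)
first = cache.get("small-model", "What is MCP?")
second = cache.get("small-model", "  what is   MCP? ")
print(cache.hits, cache.misses)
```

The second lookup is served from the cache even though its spacing and casing differ. A production cache would also need expiry and a size limit, and it should not be used for prompts whose answers depend on fresh data.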

Use Cases and Real-World Applications

Industry-Specific Implementations

Healthcare, finance, e-commerce, and enterprise knowledge management all benefit differently from these platforms. Compliance needs and integration complexity can shape the choice, as OpenAI’s simpler approach may suffice for straightforward applications, while MCP’s structure suits intricate data flows.

Task-Specific Capabilities

Whether you need chatbot-style conversational AI, document analysis, multi-modal interactions, or autonomous agents, both platforms provide mechanisms to connect with relevant tools. The preferred solution often depends on your project’s technical scope and integration demands.

Long-term Viability and Strategic Considerations

Vendor Lock-in Assessment

OpenAI’s proprietary APIs may create some lock-in, while MCP’s protocol-based design can ease integration with multiple AI models. However, migrating between platforms generally requires architecture changes.

Future Roadmap Comparison

Both OpenAI and Anthropic continue to innovate. OpenAI emphasizes broad applicability and frequent feature releases, whereas Anthropic often focuses on safety, context handling, and deep protocol development.

Ecosystem Analysis

The broader AI ecosystem around each platform influences tool availability, community support, and partner networks. While OpenAI enjoys a large developer base and widespread third-party integrations, MCP’s protocol-centric approach is attracting diverse collaborators as well.

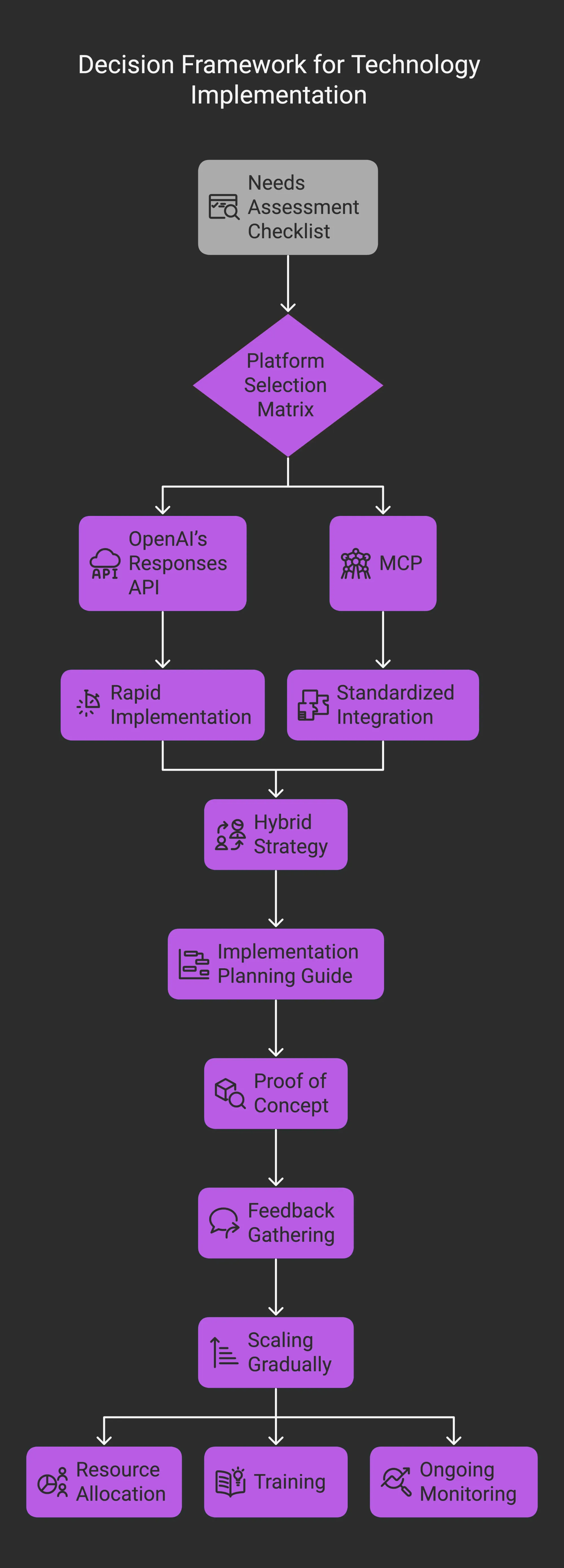

Decision Framework: Making the Right Choice

Needs Assessment Checklist

Evaluate technical requirements, performance needs, security obligations, and programming language preferences. Budget constraints and plans for future growth should also shape your decision.

Platform Selection Matrix

Choose OpenAI’s Responses API if you want rapid implementation, strong documentation, and built-in tools. Consider MCP if you require standardized integration for complex or high-volume environments and value flexibility in connecting multiple AI systems.

A hybrid strategy can also be effective, using each platform where it excels.

Implementation Planning Guide

Start with a proof of concept, gather feedback, and scale gradually. Proper resource allocation, training, and ongoing monitoring are essential to optimize performance and control costs over the long term.

Conclusion

Summary of Key Differences

OpenAI emphasizes centralized ease of use and tighter integration, while Anthropic’s protocol-based MCP fosters standardization and decentralized flexibility. Both offer powerful capabilities, yet differ in how they handle context, tool integration, scalability, and security.

The Evolving Landscape of AI Agent Platforms

As the industry advances, both providers regularly release new features. Best practices continue to emerge around AI models, tool integration, and data management, so staying informed is critical.

Final Recommendations

Before choosing, clarify your organization’s current and future AI needs. Weigh factors such as cost, developer experience, security, and scalability. By aligning these elements with each platform’s strengths, you can confidently select the best agent platform for your use case.

By thoughtfully evaluating your integration goals, you’ll find a solution—whether OpenAI’s Responses API or Anthropic’s MCP—that effectively powers your AI initiatives.