OpenAI’s Revolutionary Responses API: A New Era in AI Agent Development

The AI landscape has been evolving rapidly, with each advance bringing us closer to truly intelligent systems. Among these developments, OpenAI’s Responses API (sometimes informally called its Agents API), released alongside the open-source Agents SDK, stands out as a pivotal innovation that is changing how developers build and deploy AI agents.

For years, developers working with agentic AI models have had to navigate a complex environment of separate API calls, custom orchestration logic, and intricate prompt engineering to create even moderately intelligent agents. But now, with the introduction of the Responses API, OpenAI has dramatically simplified this process while simultaneously expanding what’s possible.

An important aspect of this development is that the Responses API is not just another addition to OpenAI’s offerings—it’s slated to replace the existing Assistants API. OpenAI has announced plans to deprecate the Assistants API in the first half of 2026, providing developers with a 12-month transition period after the official announcement. This strategic shift underscores the significance of the Responses API and makes understanding its capabilities especially crucial for developers currently using the Assistants API or planning new AI agent implementations.

In this comprehensive guide, you’ll discover what makes this platform revolutionary, how it works under the hood, and why it represents such a significant leap forward for AI agent development. Whether you’re already immersed in creating autonomous systems or just exploring the possibilities, understanding OpenAI’s Responses API will give you valuable insights into where AI technology is headed.

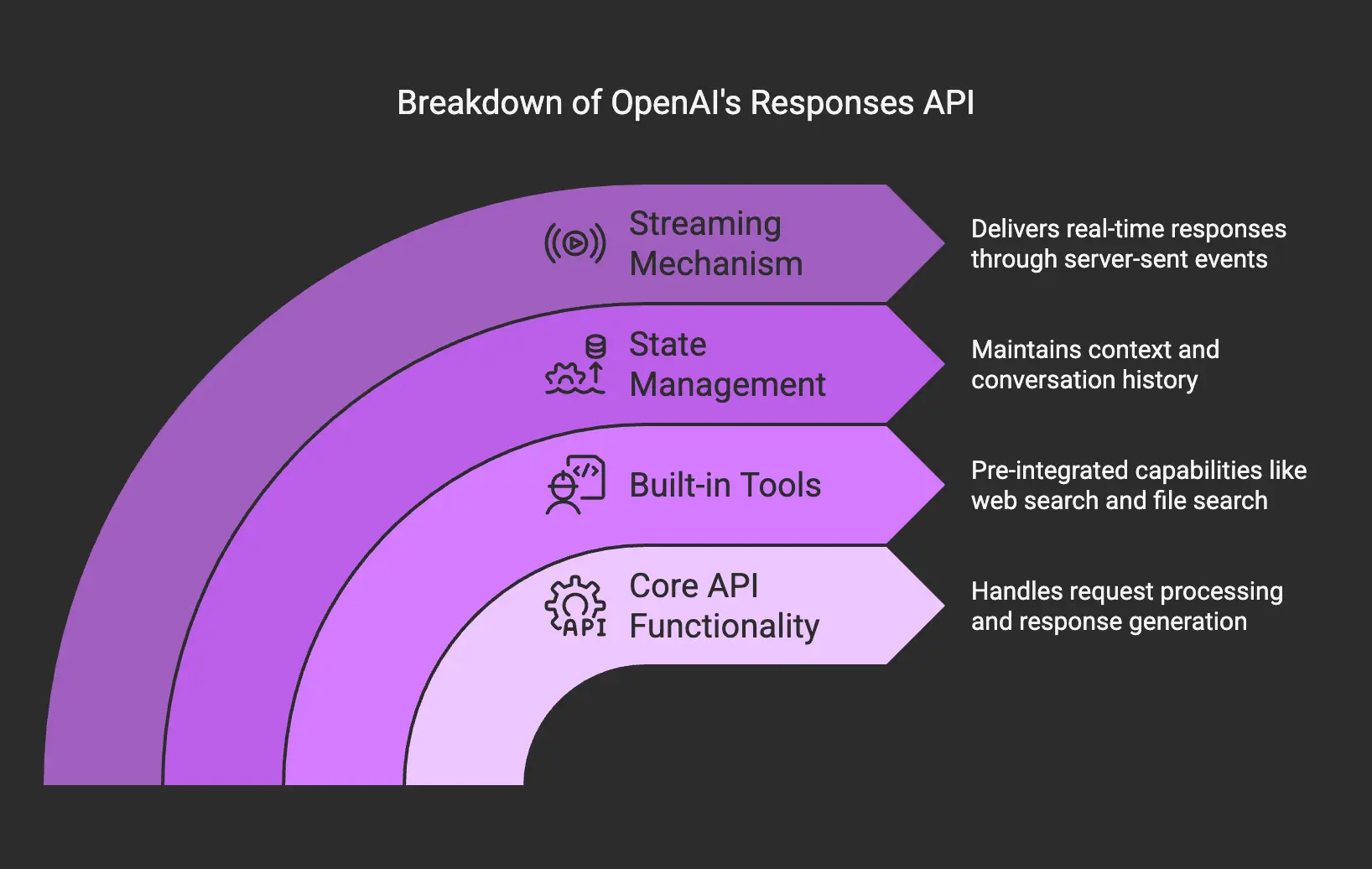

Understanding the Architecture and Key Components of OpenAI’s Responses API

At its core, the Responses API serves as a powerful bridge between OpenAI’s advanced language models and practical, real-world applications. It essentially combines the simplicity of the Chat Completions API with the more advanced tool-using capabilities that were previously available through the Assistants API.

The API follows a RESTful architecture, supporting both standard HTTP requests and real-time streaming of responses. This design choice enables developers to receive immediate feedback and create more interactive user experiences—a crucial factor for applications requiring responsiveness.
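To make the streaming point concrete, here is a minimal sketch of consuming a streamed response in Python. It assumes the official `openai` package, where passing `stream=True` to `client.responses.create` yields typed events and new text arrives in events of type `response.output_text.delta`. The helper name `collect_stream` is hypothetical, and it accepts any iterable of events so it can be exercised without a network call.

```python
from typing import Any, Callable, Iterable


def collect_stream(
    events: Iterable[Any],
    on_delta: Callable[[str], None] = lambda text: print(text, end="", flush=True),
) -> str:
    """Accumulate the text from a stream of Responses API events.

    Only events of type "response.output_text.delta" carry new text; other
    event types (response created, completed, tool progress) are skipped here.
    """
    parts: list[str] = []
    for event in events:
        if event.type == "response.output_text.delta":
            parts.append(event.delta)
            on_delta(event.delta)  # e.g. render the fragment immediately
    return "".join(parts)
```

In practice you would call it as `collect_stream(client.responses.create(model="gpt-4o", input="...", stream=True))`; other event types, such as tool-call progress, can be handled in the same loop.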

Key Components and API Structure

The Responses API consists of several key components:

What makes this structure special is how it simplifies what was previously complex. Compared with Chat Completions, the Responses API offers a more direct interface for using multiple tools in one request and better handling of conversation state. With Chat Completions, developers must resend the full message history on every call; with the Responses API, responses can be stored server-side and chained with the `previous_response_id` parameter, so the API carries the context forward. This makes it much easier to create sophisticated conversational experiences.
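A minimal sketch of server-side conversation state, assuming the Python SDK and the `previous_response_id` parameter, which lets each new request build on a stored earlier response instead of resending the full transcript. The function `run_conversation` is a hypothetical helper; it takes the client as an argument so it can be tested with a stand-in.

```python
from typing import Any, Optional


def run_conversation(client: Any, model: str, user_turns: list[str]) -> list[str]:
    """Send turns one at a time, letting the API carry the conversation state.

    Each request after the first passes previous_response_id, so the
    earlier turns do not have to be resent by the caller.
    """
    replies: list[str] = []
    previous_id: Optional[str] = None
    for turn in user_turns:
        request: dict[str, Any] = {"model": model, "input": turn}
        if previous_id is not None:
            request["previous_response_id"] = previous_id
        response = client.responses.create(**request)
        replies.append(response.output_text)
        previous_id = response.id  # chain the next turn onto this response
    return replies
```

This relies on OpenAI storing each response, which is the default behavior; if you disable storage, you need to resend the history yourself.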

Integration with the Open-Source Agents SDK

One of the most powerful aspects of the Responses API is its seamless integration with the open-source Agents SDK. This combination allows developers to build AI agents that can:

The Agents SDK provides building blocks that simplify agent creation, bringing AI-driven development into reach for a much broader range of developers.

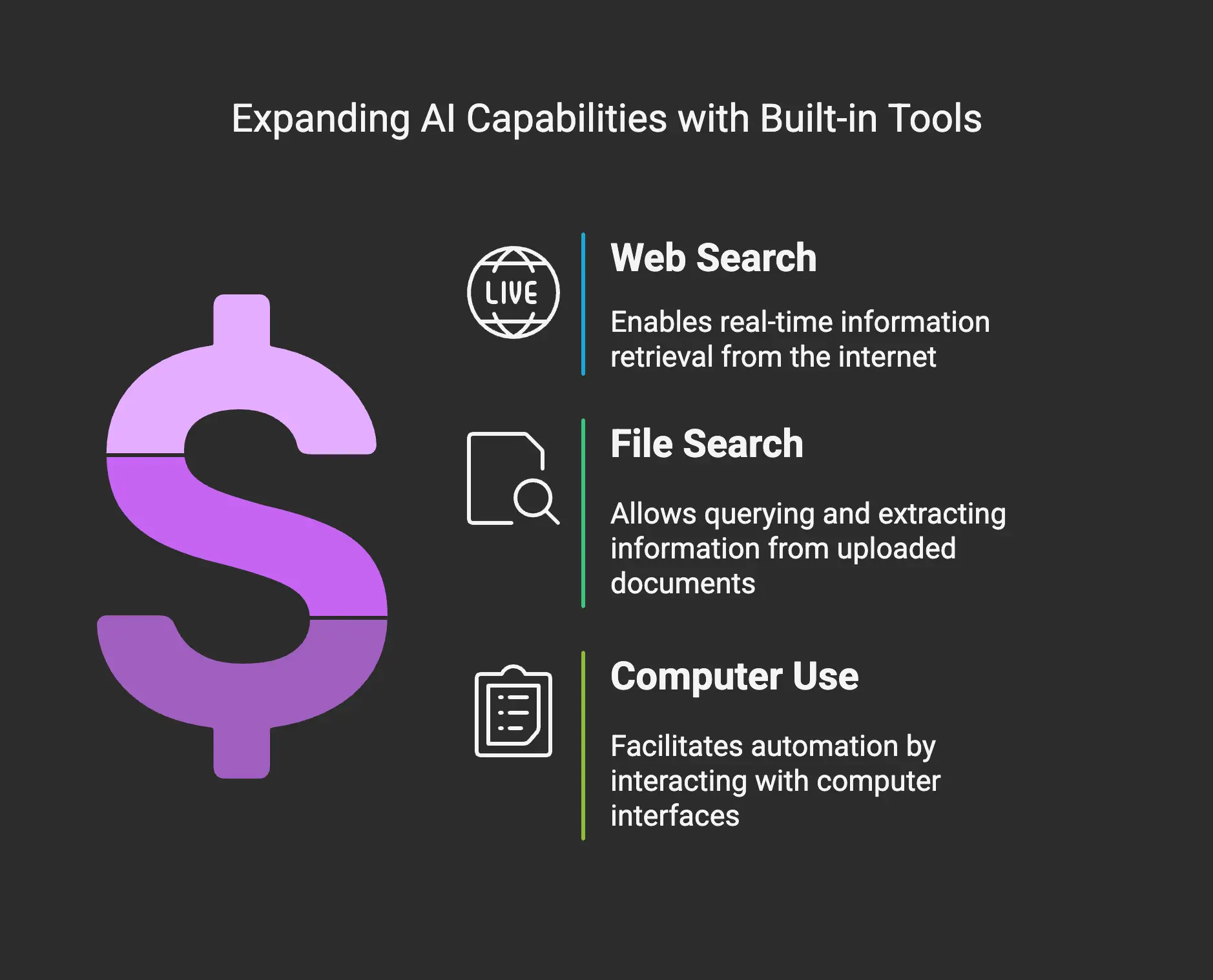

Revolutionary Built-in Tools: Expanding AI Capabilities

What truly sets the Responses API apart from previous offerings are its built-in tools. These pre-integrated capabilities dramatically expand what AI models can do without requiring complex custom integrations or separate function calls.

Web Search: Real-time Information Access

The web search capability enables AI agents to retrieve up-to-date information from the internet, addressing one of the most significant limitations of traditional language models: their inability to access real-time data. This is particularly valuable for applications requiring current information, such as news analysis, research assistance, or market intelligence.

The implementation is straightforward. Adding the tool to a request lets the model decide when to search the web and incorporate the results, with citations, into its response. The tool is documented in OpenAI’s platform guides, and OpenAI reports that GPT-4o search preview, the model behind it, scores 90% on its SimpleQA benchmark.
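The web search tool also accepts optional settings. A minimal sketch, assuming the `search_context_size` (`"low"`, `"medium"`, or `"high"`) and approximate `user_location` options described in OpenAI’s web search guide; `web_search_tool` is a hypothetical helper that only builds the tool entry, so it runs offline.

```python
from typing import Any, Optional


def web_search_tool(
    context_size: str = "medium",
    country: Optional[str] = None,
    city: Optional[str] = None,
) -> dict[str, Any]:
    """Build a web_search_preview entry for responses.create(tools=[...])."""
    if context_size not in ("low", "medium", "high"):
        raise ValueError("context_size must be 'low', 'medium', or 'high'")
    tool: dict[str, Any] = {"type": "web_search_preview", "search_context_size": context_size}
    if country or city:
        # An approximate location lets the search favor locally relevant results
        location: dict[str, str] = {"type": "approximate"}
        if country:
            location["country"] = country  # two-letter ISO code, e.g. "GB"
        if city:
            location["city"] = city
        tool["user_location"] = location
    return tool
```

Pass the result in the `tools` list, for example `client.responses.create(model="gpt-4o", tools=[web_search_tool("low", country="GB")], input="...")`. A lower context size trades some answer quality for lower cost and faster responses.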

File Search: Knowledge Base Querying

The file search tool empowers AI agents to query and extract information from documents uploaded to the system. This transforms how businesses can interact with proprietary information, enabling natural language queries against company documentation, research papers, knowledge bases, and more.

File search implementation supports multiple file formats and includes features like reranking search results, attribute filtering, and query rewriting. It’s not just about finding relevant documents—it’s about extracting the specific information needed to answer a question or perform a task.
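File search queries documents that have first been uploaded to a vector store. A minimal sketch of the tool entry, assuming the `vector_store_ids`, `max_num_results`, and `filters` fields from OpenAI’s file search guide; the store ID `"vs_example"`, the `department` attribute, and both helper names are hypothetical placeholders.

```python
from typing import Any, Optional


def eq_filter(key: str, value: Any) -> dict[str, Any]:
    """Comparison filter on a file attribute, e.g. eq_filter("department", "legal")."""
    return {"type": "eq", "key": key, "value": value}


def file_search_tool(
    vector_store_ids: list[str],
    max_num_results: int = 5,
    attribute_filter: Optional[dict[str, Any]] = None,
) -> dict[str, Any]:
    """Build a file_search tool entry that queries one or more vector stores."""
    if not vector_store_ids:
        raise ValueError("at least one vector store ID is required")
    tool: dict[str, Any] = {
        "type": "file_search",
        "vector_store_ids": list(vector_store_ids),
        "max_num_results": max_num_results,  # cap how many chunks come back
    }
    if attribute_filter:
        tool["filters"] = attribute_filter  # only search files whose attributes match
    return tool
```

Restricting results with an attribute filter keeps answers grounded in the relevant subset of documents, for instance only legal policies when the question is about contracts.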

Computer Use: Automating Tasks

Perhaps the most groundbreaking built-in tool is the computer use capability, which allows AI agents to interact with computer interfaces by generating mouse and keyboard actions. Still in research preview, this feature opens up entirely new possibilities for automation by enabling AI to:

The computer use tool has shown promising results in testing: OpenAI reports that the Computer-Using Agent (CUA) model behind it achieves a 38.1% success rate on OSWorld for full computer tasks and higher rates (58.1% on WebArena and 87% on WebVoyager) for web-based interactions.
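Computer use works as a loop: the model returns `computer_call` items describing an action, your code performs the action and replies with a screenshot, and the model decides the next step. A minimal sketch of one turn of that loop, assuming the `computer_use_preview` tool and the `computer_call_output` / `computer_screenshot` item shapes from OpenAI’s computer use guide. `execute` is a hypothetical callback you would implement with a browser-automation library, and the display size is an example value.

```python
import base64
from typing import Any, Callable

# Tool entry for the computer use preview; size and environment are example values
COMPUTER_TOOL = {
    "type": "computer_use_preview",
    "display_width": 1024,
    "display_height": 768,
    "environment": "browser",
}


def next_computer_input(response: Any, execute: Callable[[Any], bytes]) -> list[dict]:
    """Perform every computer_call in a response and build the follow-up input items."""
    items = []
    for item in response.output:
        if item.type != "computer_call":
            continue  # skip reasoning and message items
        # Hypothetical executor: performs item.action (click, type, scroll, ...)
        # and returns a PNG screenshot of the resulting screen as raw bytes.
        png = execute(item.action)
        data_url = "data:image/png;base64," + base64.b64encode(png).decode("ascii")
        items.append({
            "type": "computer_call_output",
            "call_id": item.call_id,  # ties the screenshot to the action it answers
            "output": {"type": "computer_screenshot", "image_url": data_url},
        })
    return items
```

The returned items go back as `input` in the next `client.responses.create` call along with `previous_response_id=response.id`, using the `computer-use-preview` model and `truncation="auto"`. A production loop should also handle any safety checks the model flags before acting.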

The Technical Foundations Behind These Tools

What makes these tools particularly impressive is their direct integration into the API. Unlike traditional approaches that require separate systems for different capabilities, the Responses API provides a unified interface for all these tools. This integration is achieved through:

The result is a simplified development experience that doesn’t sacrifice power or flexibility.

Why the OpenAI Responses API Is Transformational for Developers

The impact of the Responses API on AI development can’t be overstated. It represents a fundamental shift in how developers can create and deploy AI agents, making what was once complex and time-consuming dramatically more accessible.

Simplified Development Workflows

Before the Responses API, creating AI agents that could interact with external data sources or perform complex tasks required extensive custom development. Developers had to:

The Responses API eliminates much of this complexity. Tasks that once required hundreds of lines of code can now be accomplished with just a few API calls, making agentic AI development accessible to a wider range of practitioners, not just specialized experts.

Enhanced Contextual Understanding

The API’s ability to maintain context across interactions represents a major advancement. Building AI agents with OpenAI now involves less manual state management, as the API handles the complexities of conversation flow. This results in more natural and coherent interactions, with the AI building on previous exchanges without losing track.

Improved Adaptability and Learning

The Responses API does not retrain the underlying model on your interactions, but it makes adaptive agents easier to build. By feeding prior context, user feedback, and retrieved documents into later requests, and by refining instructions over time, developers can make agents more effective as they are used. This adaptability is crucial for dynamic environments and evolving user needs.

Seamless Integration with Existing Systems

Another major advantage is how easily the Responses API integrates with existing systems and workflows. Its design makes it straightforward to incorporate AI capabilities without significant architectural changes. This is particularly valuable for enterprises looking to enhance current applications rather than replace them completely.

Building Your First AI Agent with OpenAI’s Responses API

Now that we understand the transformative potential of this platform, let’s explore how to implement it in practice.

Setting Up Your Development Environment

Before you can start building with the Responses API, you’ll need to set up your environment:

OpenAI client libraries are available for multiple programming languages, including Python, JavaScript, and Ruby, making it accessible regardless of your tech stack.

Authentication and API Access

Authentication with the Responses API uses the same standard API key as the rest of the OpenAI platform, including Chat Completions. The Python client reads the key from the OPENAI_API_KEY environment variable by default. Here’s a basic example of setting up the client and making a first request in Python:

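```python
from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()

response = client.responses.create(
    model="gpt-4o",
    input="Write a one-sentence bedtime story about a unicorn.",
)

# output_text aggregates all text output from the response
print(response.output_text)
```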

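Code Examples: Creating a Basic AI Agent

Below is a simple example of using the Responses API to let an AI agent search the web for information:

```python
from openai import OpenAI

client = OpenAI()

# Example using the Responses API with web search: the model decides when to search
response = client.responses.create(
    model="gpt-4o",
    tools=[{"type": "web_search_preview"}],
    input="What were the key announcements at OpenAI's recent Dev Day?",
)

print(response.output_text)

# Web sources are attached to the answer text as url_citation annotations
for item in response.output:
    if item.type == "message":
        for part in item.content:
            for annotation in getattr(part, "annotations", []):
                if annotation.type == "url_citation":
                    print(annotation.title, annotation.url)
```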

OpenAI’s documentation provides further examples covering multi-tool usage and more sophisticated agent orchestration, which make a solid foundation for your own custom implementations.

Testing and Debugging Your Implementation

When working with the Responses API, effective testing and debugging are essential:

AI responses can be unpredictable, so thorough testing across a range of inputs is crucial for robust applications.
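One practical debugging habit is to log a compact summary of every response. A minimal sketch, assuming the response object’s `id`, `status`, `output`, and `usage` fields as exposed by the Python SDK; `debug_summary` is a hypothetical helper, and it reads the usage fields defensively so it also works on test doubles.

```python
from typing import Any


def debug_summary(response: Any) -> dict[str, Any]:
    """Collect the fields most useful when logging or debugging a response."""
    usage = getattr(response, "usage", None)
    return {
        "id": response.id,          # quote this ID when investigating an issue
        "status": response.status,  # e.g. "completed" or "incomplete"
        "output_types": [item.type for item in response.output],  # messages, tool calls
        "input_tokens": getattr(usage, "input_tokens", None),
        "output_tokens": getattr(usage, "output_tokens", None),
    }
```

Logging `output_types` shows at a glance whether the model actually called a tool, which is often the first question when a response looks wrong.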

Advanced Implementation Patterns and Strategies

Once you’re comfortable with the basics, you can adopt more sophisticated implementation patterns that make full use of the Responses API’s capabilities.

Multi-Tool and Multi-Model Integration Techniques

One of the most powerful features is combining multiple tools in a single AI agent. For example, you could create an agent that:

This multi-tool integration enables comprehensive solutions that handle complex workflows end-to-end.
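Built-in tools run on OpenAI’s side, but custom functions run in your code. A minimal sketch of mixing both, assuming the Responses API’s flat function-tool format (`type`, `name`, `description`, `parameters`) and its `function_call` / `function_call_output` items; the `get_order_status` function, its schema, and the `run_function_calls` helper are hypothetical.

```python
import json
from typing import Any, Callable

# A custom function tool in the Responses API's flat format (hypothetical example)
ORDER_TOOL = {
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the status of an order by its ID.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def run_function_calls(response: Any, handlers: dict[str, Callable[..., Any]]) -> list[dict]:
    """Execute each function_call in a response and build the outputs to send back."""
    outputs = []
    for item in response.output:
        if item.type != "function_call":
            continue  # built-in tools such as web search are executed by OpenAI
        args = json.loads(item.arguments)  # arguments arrive as a JSON string
        result = handlers[item.name](**args)
        outputs.append({
            "type": "function_call_output",
            "call_id": item.call_id,
            "output": json.dumps(result),
        })
    return outputs
```

A request such as `client.responses.create(model="gpt-4o", tools=[{"type": "web_search_preview"}, ORDER_TOOL], input="...")` lets the model search the web and look up orders in the same turn; send the list returned by `run_function_calls` back as `input` with `previous_response_id=response.id` so the model can finish its answer.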

Orchestrating Complex AI Agent Workflows

For more sophisticated applications, you might need to orchestrate multiple AI agents working together. The Agents SDK helps through features like:

These orchestration capabilities allow for the creation of AI systems that can handle multi-step processes while maintaining reliability and safety.
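The Agents SDK provides its own primitives for this, such as handoffs between agents. To show the underlying idea without depending on the SDK, here is a hand-rolled triage-and-delegate sketch built directly on `client.responses.create` and its `instructions` parameter. The specialist names, their instructions, and the keyword routing are all hypothetical, and this is not how the SDK implements handoffs.

```python
from typing import Any

# Hypothetical specialist agents, each represented only by its instructions
SPECIALISTS = {
    "billing": "You handle billing and refund questions. Be precise about amounts.",
    "technical": "You troubleshoot technical problems step by step.",
    "general": "You answer general questions briefly and politely.",
}


def triage(question: str) -> str:
    """Naive keyword router; a real system might ask a model to classify instead."""
    text = question.lower()
    if any(word in text for word in ("refund", "invoice", "charge")):
        return "billing"
    if any(word in text for word in ("error", "crash", "bug")):
        return "technical"
    return "general"


def handle(client: Any, question: str, model: str = "gpt-4o") -> tuple[str, str]:
    """Route the question, then delegate it to the chosen specialist's instructions."""
    role = triage(question)
    response = client.responses.create(
        model=model,
        instructions=SPECIALISTS[role],  # system-level guidance for this specialist
        input=question,
    )
    return role, response.output_text
```

Keeping routing separate from the specialists makes each piece easy to test and swap; replacing the keyword check with a model-based classifier changes only `triage`.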

Managing Rate Limits and Performance Optimization

When working with the Responses API at scale, managing rate limits and optimizing performance become critical. Optimizing OpenAI API performance involves several strategies:

Performance optimization not only improves user experience but also helps manage operational expenses.
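Exponential backoff is the standard way to respond to rate-limit errors. A minimal, library-agnostic sketch: the Python SDK already retries some failures on its own (configurable with `OpenAI(max_retries=...)`), so a wrapper like this hypothetical `with_backoff` is mainly useful for custom retry policies. The `sleep` parameter is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_backoff(
    call: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    max_attempts: int = 5,
    base_delay: float = 1.0,
    jitter: bool = True,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` with exponential backoff when it raises one of `retry_on`."""
    for attempt in range(max_attempts):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = base_delay * (2 ** attempt)  # 1s, 2s, 4s, ...
            if jitter:
                delay = random.uniform(0, delay)  # "full jitter" spreads out retries
            sleep(delay)
    raise RuntimeError("max_attempts must be at least 1")
```

For example, `with_backoff(lambda: client.responses.create(model="gpt-4o", input="..."), retry_on=(openai.RateLimitError,))`. Jitter keeps many clients that hit a limit at the same moment from retrying in lockstep.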

Cost-Effective Implementation Strategies

Developing cost-effective strategies is important for sustainable AI agent development. Consider:

By carefully managing these factors, you can create powerful AI agents that remain economically viable at scale.
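Every response reports its token usage, which makes per-request cost easy to track. A minimal sketch, assuming the `usage.input_tokens` and `usage.output_tokens` fields; the prices are parameters because they change over time, and any figures you pass in should come from OpenAI’s current pricing page rather than from this example. The helper name `estimate_cost` is hypothetical.

```python
from typing import Any


def estimate_cost(usage: Any, input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimate the cost in dollars of one response from its token usage.

    Prices are per million tokens and must come from your own pricing table;
    this ignores discounts such as those for cached input tokens.
    """
    return (
        usage.input_tokens * input_price_per_m
        + usage.output_tokens * output_price_per_m
    ) / 1_000_000
```

Summing this per user or per feature shows where spend concentrates, and setting `max_output_tokens` on a request caps the output side of the calculation.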

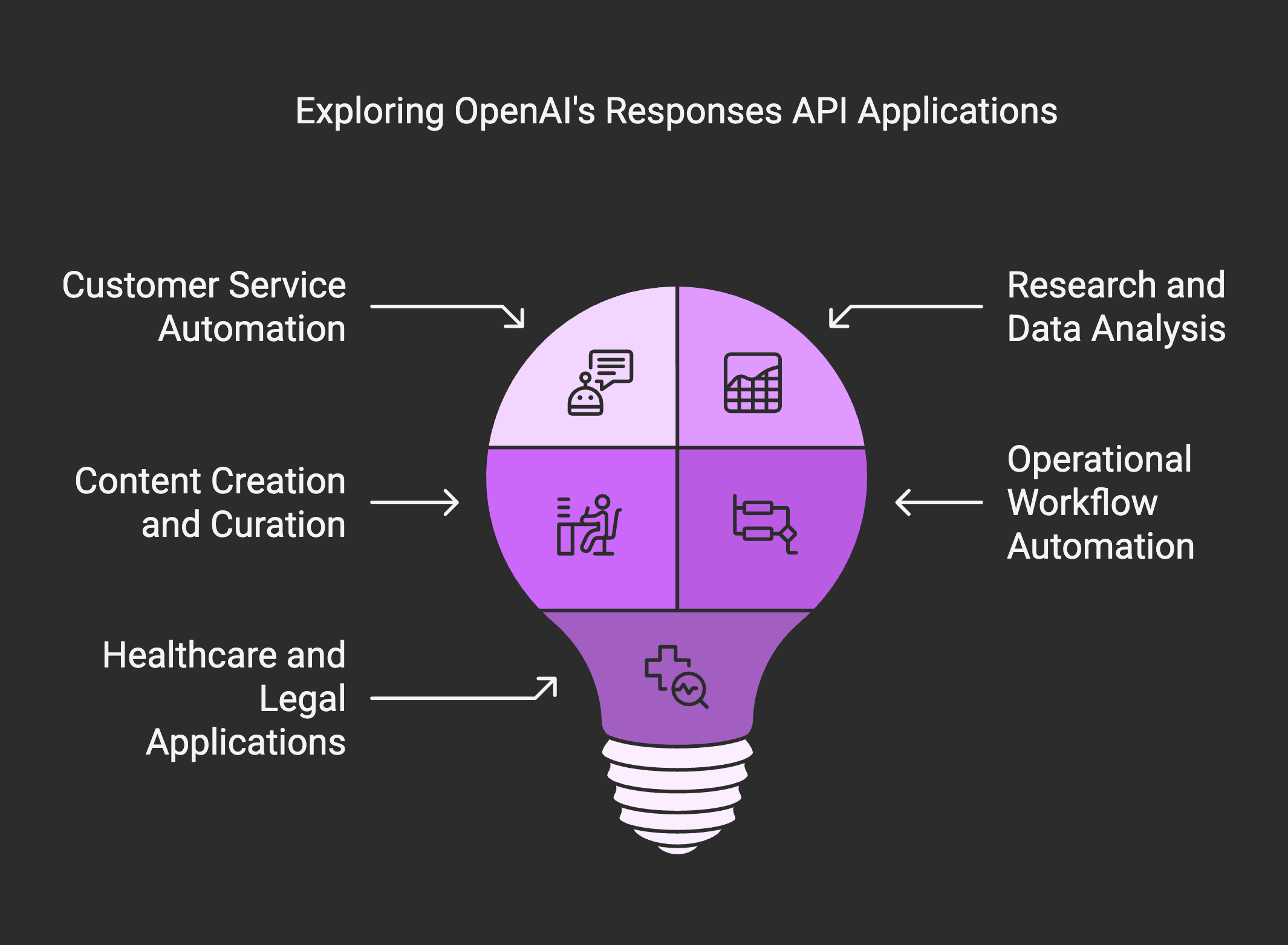

Real-World Applications and Use Cases for OpenAI’s Responses API

The flexibility and power of this platform enable a wide range of real-world applications across industries.

Customer Service Automation

AI agents built with the Responses API can transform customer service by:

This significantly reduces response times, improves customer satisfaction, and allows human agents to focus on more complex issues.

Research and Data Analysis

In research and data analysis, the Responses API enables AI assistants that can:

These capabilities are particularly valuable in fields like market research, scientific research, and competitive intelligence.

Content Creation and Curation

Content creators can leverage the Responses API to build AI agents that assist with:

Such applications can dramatically boost content production efficiency while maintaining quality.

Operational Workflow Automation

The most transformative applications often involve operational workflow automation, where AI agents can:

This is where the Responses API’s impact on industry is most evident: routine tasks become automated, and human workers are freed to focus on higher-value activities.

Healthcare and Legal Applications

Specialized domains like healthcare and legal services also benefit from AI agents powered by the Responses API:

While these use cases require careful attention to privacy, security, and accuracy, they demonstrate the broad applicability of the Responses API.

Enterprise Adoption: Integration and Scaling Considerations

For enterprises considering adoption, several key factors come into play.

Security Best Practices and Compliance

Security is paramount when implementing AI systems, especially those interacting with sensitive data or critical systems. Key security practices include:

Compliance with regulations like GDPR or HIPAA is also essential, particularly for sensitive information.

Scaling Your Responses API Implementation

As usage grows, scaling becomes crucial. Production best practices for the Responses API include:

These strategies help ensure consistent performance as adoption expands.

Cost Management for Large-Scale Deployments

At scale, cost management becomes increasingly important. Effective strategies include:

Proactive cost management ensures the long-term viability of large-scale deployments.

Migration Strategies from Assistants API

For organizations currently using the Assistants API, migration to the Responses API is an important consideration. OpenAI plans to deprecate the Assistants API in the first half of 2026, with a 12-month support window following the announcement.

Effective migration strategies include:

Early planning ensures a smooth transition with minimal disruption to existing workflows.
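One common migration step is moving an assistant’s configuration and a thread’s history into a single Responses request. A minimal sketch, assuming you have exported thread messages as simple `{"role": ..., "text": ...}` dictionaries, oldest first (a hypothetical export format, not the Assistants API’s own message objects), and that the assistant’s instructions map onto the Responses API’s `instructions` parameter. Both helper names are hypothetical.

```python
from typing import Any


def thread_to_input(messages: list[dict[str, str]]) -> list[dict[str, str]]:
    """Convert exported thread messages into a Responses API `input` list.

    Only user and assistant turns are carried over, in their original order.
    """
    converted = []
    for message in messages:
        if message["role"] not in ("user", "assistant"):
            continue
        converted.append({"role": message["role"], "content": message["text"]})
    return converted


def migrate_request(
    assistant_instructions: str,
    messages: list[dict[str, str]],
    model: str = "gpt-4o",
) -> dict[str, Any]:
    """Assistant instructions map to `instructions`; thread history maps to `input`."""
    return {
        "model": model,
        "instructions": assistant_instructions,
        "input": thread_to_input(messages),
    }
```

The result can be passed as `client.responses.create(**migrate_request(...))`; after that first request, later turns can be chained with `previous_response_id` rather than replaying the whole thread.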

Performance Benchmarks and Metrics

Understanding the performance characteristics of the Responses API is essential for effective implementation.

Response Times and Latency Considerations

Performance can vary based on factors such as:

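Typical latencies range from roughly 500 ms to 3,000 ms for straightforward requests, but tool calls and long outputs can push them higher, so measure latency under your own workload when designing responsive applications.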
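Because latency varies so much by workload, it is worth measuring it yourself. A minimal sketch that times repeated calls with `time.perf_counter` and reports nearest-rank p50 and p95 values; `measure_latency` and `percentile` are hypothetical helpers, and `call` would wrap a real request such as `lambda: client.responses.create(...)`.

```python
import math
import time
from typing import Callable


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile, e.g. p=95 for the 95th percentile."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


def measure_latency(call: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Time repeated calls and report median and tail latency in milliseconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        timings.append((time.perf_counter() - start) * 1000)
    return {"p50_ms": percentile(timings, 50), "p95_ms": percentile(timings, 95)}
```

Tail latency (p95) usually matters more than the median for interactive applications, because it reflects the slow requests users actually notice.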

Accuracy and Reliability Metrics

The Responses API’s accuracy is impressive but not infallible:

Continuous monitoring and validation remain important for mission-critical applications.
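One way to validate output is to request JSON that follows a schema and check it before acting on it. A minimal sketch, assuming the Responses API’s `text={"format": {"type": "json_schema", ...}}` option for structured outputs; the `ticket_triage` schema and the `parse_triage` helper are hypothetical.

```python
import json
from typing import Any

# JSON Schema for a hypothetical ticket-triage result
TRIAGE_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string", "enum": ["billing", "technical", "other"]},
        "urgent": {"type": "boolean"},
    },
    "required": ["category", "urgent"],
    "additionalProperties": False,
}

# Passed as responses.create(..., text=TEXT_FORMAT) to request schema-constrained JSON
TEXT_FORMAT = {
    "format": {"type": "json_schema", "name": "ticket_triage", "schema": TRIAGE_SCHEMA, "strict": True}
}


def parse_triage(output_text: str) -> dict[str, Any]:
    """Parse and sanity-check the model's JSON before acting on it."""
    data = json.loads(output_text)
    missing = [key for key in TRIAGE_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if data["category"] not in TRIAGE_SCHEMA["properties"]["category"]["enum"]:
        raise ValueError(f"unexpected category: {data['category']}")
    return data
```

Pass `text=TEXT_FORMAT` to `client.responses.create` and feed `response.output_text` to `parse_triage`; the check is a cheap second line of defense even when the API enforces the schema.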

Comparative Analysis with Alternative Approaches

Compared to alternatives like custom-built AI agents or competing APIs, the Responses API generally offers:

However, specialized solutions may outperform it for narrowly focused tasks where custom optimization is possible.

Optimizing for Different Performance Variables

Depending on your application needs, you may need to optimize for:

Striking the right balance among these variables is key to successful implementation.

Privacy and Data Protection

Privacy considerations are crucial, especially when using features like web search and file search involving potentially sensitive data. Best practices include:

Such measures help protect users and build trust in AI-driven solutions.
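Two simple privacy measures can be applied before a request is sent: redacting obvious personal data, and setting `store=False` so the response is not stored for later retrieval. A minimal sketch; the regular expressions are illustrative only and miss many real-world formats, and `redact` and `private_request` are hypothetical helpers, the latter building keyword arguments for `client.responses.create`.

```python
import re
from typing import Any

# Illustrative patterns only; real PII detection needs far more than regexes
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers before text leaves your system."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def private_request(model: str, user_text: str) -> dict[str, Any]:
    """Build request arguments that redact input and ask OpenAI not to store the response."""
    return {"model": model, "input": redact(user_text), "store": False}
```

With storage disabled, the response cannot later be referenced through `previous_response_id`, so conversation history has to be managed on your side.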

The Future Roadmap and Potential for the Agents API

Looking ahead, the Responses API marks only the beginning of a new era in AI agent development.

Upcoming Features and Enhancements

OpenAI continues to develop and enhance the Responses API, with improvements on the horizon:

These enhancements will broaden the API’s scope and effectiveness.

The Vision for Autonomous AI Agents

OpenAI’s vision for AI agents extends beyond current capabilities, aiming to create systems that can:

This points toward a future where software evolves into autonomous, continuously learning entities.

How Responses API Fits into the Broader AI Ecosystem

The Responses API is part of a larger ecosystem of AI technologies. Its role includes:

This context can guide strategic decisions about AI adoption and technology investments.

Preparing for the Future of AI Agent Development

To prepare for tomorrow’s agentic AI landscape, organizations and developers should:

By doing so, you’ll be ready to capitalize on the transformative potential of intelligent, autonomous agents.

Conclusion: Begin Your AI Agent Journey with OpenAI’s Responses API

The OpenAI Responses API represents a major leap forward, making sophisticated AI agent development more accessible than ever. Its built-in tools, simplified workflows, and seamless integrations open doors to creating intelligent, autonomous systems that can handle a diverse array of tasks.

Key Takeaways and Implementation Checklist

As you begin working with the Responses API, remember these essential points:

These considerations will help ensure a successful deployment.

Resources for Further Learning and Support

For deeper insights and practical guidance, explore these resources:

Community and Developer Ecosystem

Keep an eye on the growing community around the Responses API, which is becoming a rich source of knowledge and support. It can offer:

Active participation can significantly enhance your AI development experience.

Taking the Next Steps with Responses API

Ready to move forward? Here’s how to get started:

The journey toward building advanced AI agents is just beginning, and the Responses API provides an excellent foundation. Whether you’re enhancing existing software or creating entirely new AI-driven experiences, the potential for innovation is immense.

For more detailed guidance on migration strategies, implementation guides, and SDK integration, check out OpenAI’s comprehensive resources.