Powerful AI Agents You Can Build With OpenAI's Responses API

Discover how to leverage OpenAI’s Responses API to build innovative AI agents that automate tasks, deliver personalized support, and offer expert advice. Explore practical use cases and best practices to transform your digital solutions.

Introduction to Building AI Agents

The arrival of OpenAI's Responses API marks a significant shift in how developers can create AI agents. Unlike previous API iterations that required complex orchestration and prompt engineering, the Responses API provides a unified interface that drastically simplifies building sophisticated AI systems while expanding their capabilities.

This API combines the straightforward approach of the Chat Completions API with advanced functionalities like web search, file search, and computer use automation. It enables developers to create AI agents that can interact with the digital world, access up-to-date information, and perform complex tasks with minimal code.

The transformative potential of the Responses API extends beyond just technical advancement. It opens up possibilities for businesses to automate workflows, enhance customer experiences, and gain competitive advantages through AI-powered solutions previously achievable only by teams with specialized expertise.

In this guide, we'll explore various types of AI agents you can build with the Responses API, from task automation assistants to expert advisory systems. We'll also cover implementation approaches, best practices, and strategies for optimizing performance and security. Whether you're looking to enhance existing applications or create entirely new AI-driven experiences, this guide will provide the insights you need.

Responses API Fundamentals

Before diving into specific agent types, it’s important to understand what makes the Responses API such a powerful foundation for AI development. At its core, the API provides a standardized way to interact with OpenAI's language models while leveraging built-in tools that extend the models’ capabilities.

Core Capabilities and Architecture

The Responses API represents a significant evolution from previous OpenAI offerings. Unlike the Chat Completions API, which focuses primarily on generating text responses, the Responses API is designed specifically for building agents that can take actions and interact with external systems.

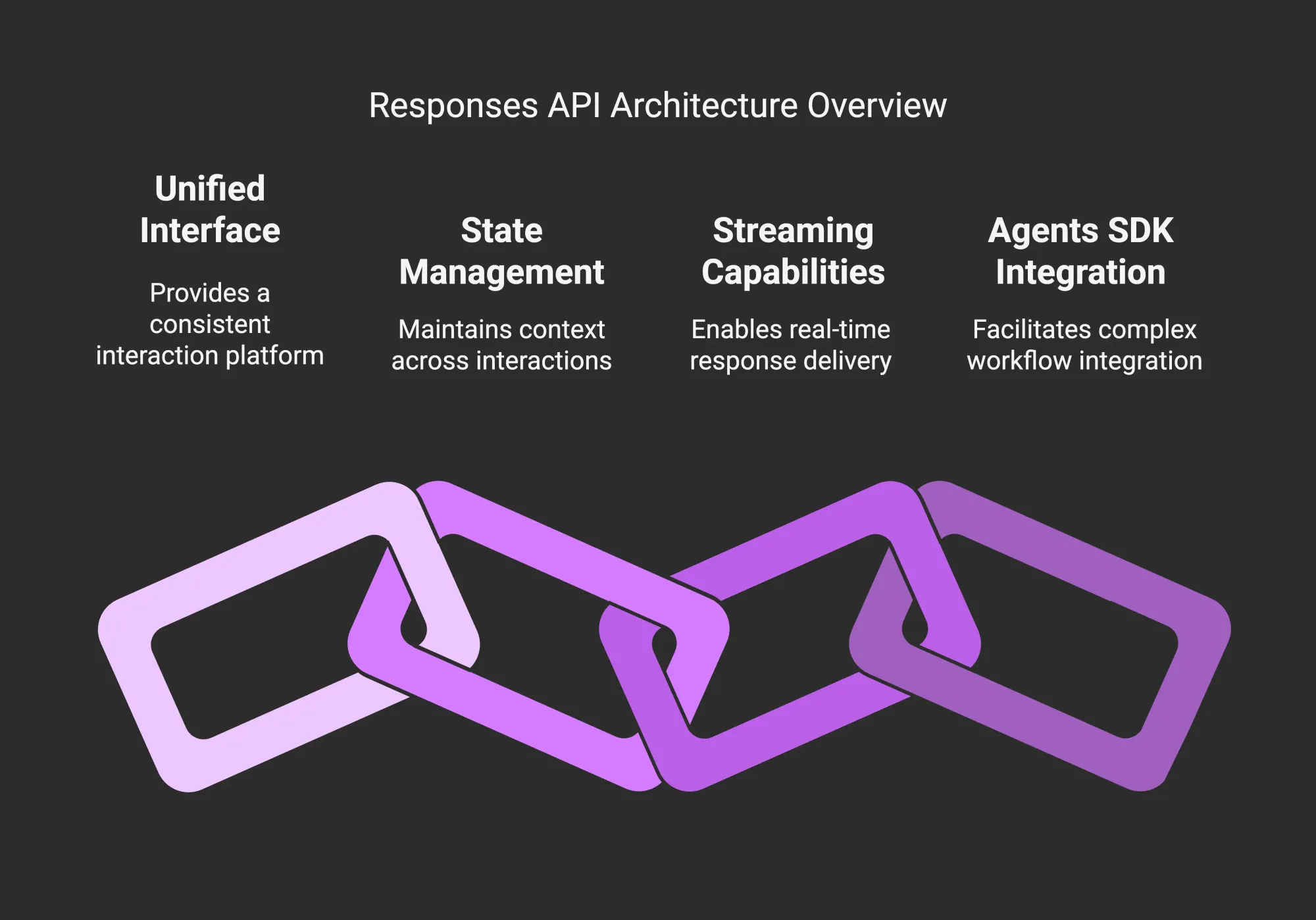

Key architectural components include:

- A unified interface for model interactions and tool usage

- Built-in state management for maintaining context

- Streaming capabilities for real-time responses

- Integration with the open-source Agents SDK for more complex workflows

This architecture significantly reduces the development complexity previously associated with building AI agents, making advanced capabilities accessible to a broader range of developers.

Available Built-in Tools

What sets the Responses API apart is its set of built-in tools, which expand what models can do without custom integrations:

- Web Search: Enables agents to retrieve up-to-date information from the internet, addressing the limitation of outdated training data.

- File Search: Allows agents to search through documents and extract relevant information, making knowledge bases and proprietary content accessible via natural language.

- Computer Use: Provides agents with the ability to interact with computer interfaces by generating mouse and keyboard actions (currently in research preview).

These tools transform what’s possible with AI agents, enabling them to access real-time data, interact with your organization's knowledge base, and even automate tasks across computer systems.

Key Advantages Over Previous APIs

The Responses API offers several significant advantages over earlier approaches:

- Simplified development workflows that reduce boilerplate code

- Integrated tool usage without complex orchestration

- Automatic context management for more coherent conversations

- Fewer round trips for tool use, since built-in tools such as web search and file search run within a single API call

- Support for both synchronous and streaming response modes

These advantages make building AI agents more accessible while simultaneously expanding what those agents can accomplish.

Setting Up Your Development Environment

To start working with the Responses API, you'll need:

- An OpenAI account with API access

- API keys with appropriate permissions

- The OpenAI client library for your preferred programming language

- A basic understanding of RESTful API concepts

With these fundamentals in place, you’re ready to explore the specific types of agents you can build with this platform.

Building Task Automation Agents

Task automation agents represent one of the most valuable applications of the Responses API. These agents can handle repetitive workflows, process information, and interact with digital systems to complete specific tasks with minimal human intervention.

Definition and Core Capabilities

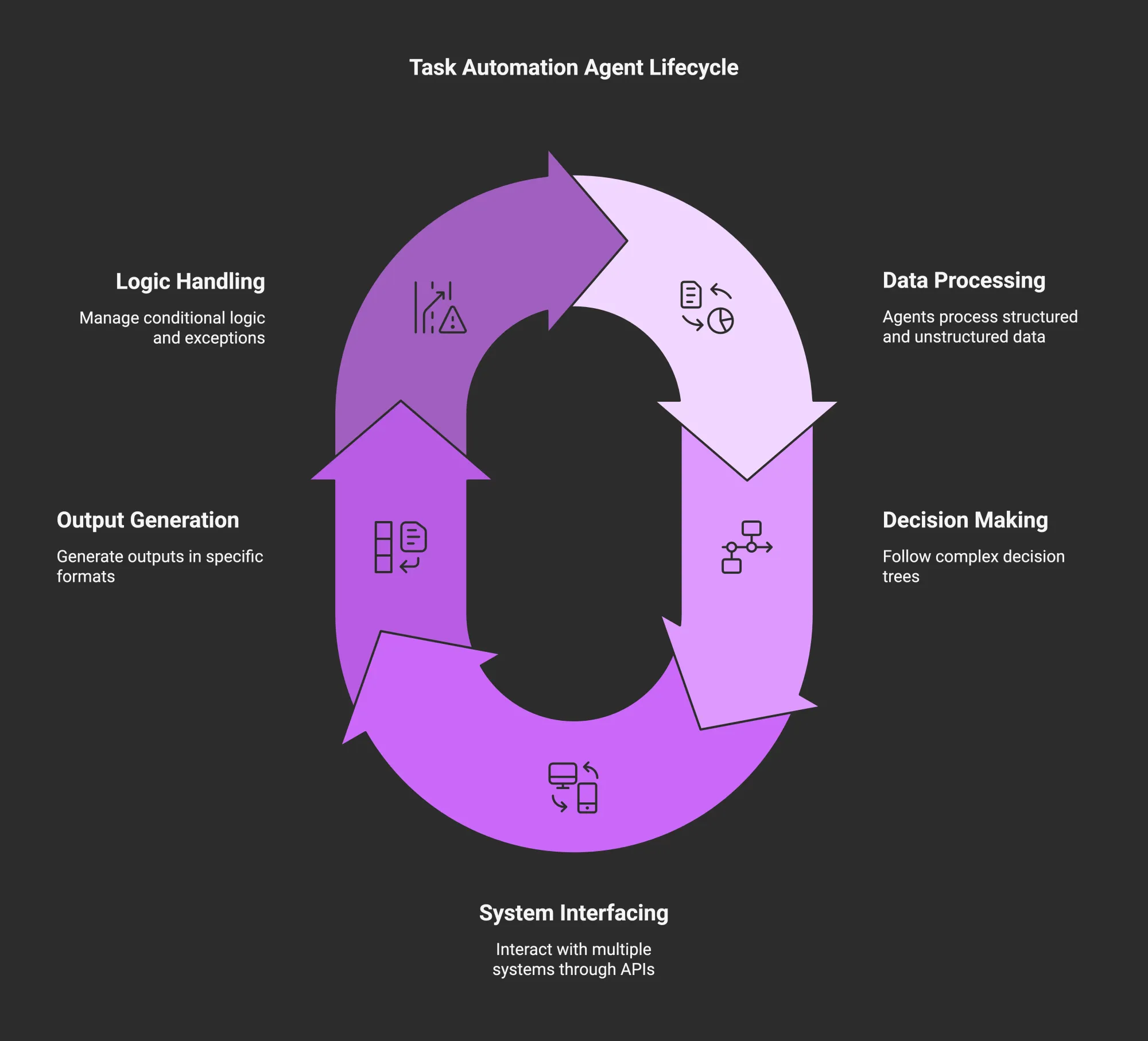

Task automation agents are AI systems designed to execute defined workflows or sequences of operations. They excel at:

- Processing structured and unstructured data

- Following complex decision trees

- Interfacing with multiple systems through APIs

- Generating outputs in specific formats

- Handling conditional logic and exception scenarios

What makes them particularly powerful with the Responses API is the ability to customize AI agents for specific business processes, adapting them to your organization's unique workflows and requirements.

Real-World Applications

Task automation agents can transform many business operations:

- Data Entry and Processing: Extracting information from documents, forms, or emails and entering it into relevant systems.

- Workflow Automation: Moving tasks through approval processes, triggering notifications, and managing status updates.

- Report Generation: Compiling data from multiple sources and creating standardized reports on a schedule.

- Calendar Management: Scheduling appointments, sending reminders, and resolving scheduling conflicts.

These applications can significantly reduce manual effort while improving consistency and reducing error rates.

Implementation Approach

Building effective task automation agents requires:

- Clearly defining the task’s scope and boundaries

- Identifying decision points and required inputs

- Determining appropriate tool usage (web search, file search, computer use)

- Implementing error handling and fallback mechanisms

- Testing extensively with varied inputs and edge cases

Here’s a simplified example of how you might structure a task automation agent using the Responses API:

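    // Example code for a document processing agent.
    // Assumes the official `openai` Node package, an ES module context,
    // and OPENAI_API_KEY set in the environment.
    import OpenAI from "openai";

    const client = new OpenAI();

    const response = await client.responses.create({
      model: "gpt-4o",
      tools: [
        // file_search needs a vector store; "vs_your_store_id" is a placeholder.
        { type: "file_search", vector_store_ids: ["vs_your_store_id"] },
        { type: "web_search" },
      ],
      input: `Extract the key financial figures from the Q3 report and compare them
    with industry benchmarks. Format the results as a bulleted summary.`,
    });

    console.log(response.output_text);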
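The "determine appropriate tool usage" step can be made explicit in code. The following is a minimal sketch, not part of the API: `selectTools` and its option names are hypothetical, and `vs_example` stands in for a vector store ID you would create beforehand.

```javascript
// Hypothetical helper: pick built-in tools based on what the task needs.
function selectTools({ needsCurrentInfo = false, vectorStoreIds = [] } = {}) {
  const tools = [];
  if (vectorStoreIds.length > 0) {
    // file_search only makes sense when there is a vector store to search.
    tools.push({ type: "file_search", vector_store_ids: vectorStoreIds });
  }
  if (needsCurrentInfo) {
    tools.push({ type: "web_search" });
  }
  return tools;
}

const exampleTools = selectTools({
  needsCurrentInfo: true,
  vectorStoreIds: ["vs_example"], // placeholder ID
});
console.log(exampleTools.map((tool) => tool.type)); // [ 'file_search', 'web_search' ]
```

Passing only the tools a task needs keeps requests cheaper and makes the agent's behavior easier to reason about.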

Developing Information Retrieval & Research Agents

Information retrieval and research agents represent one of the most powerful applications of the Responses API. These agents can gather, synthesize, and present information from various sources, providing users with comprehensive answers to complex questions.

Building Information Gathering Agents

Information retrieval agents excel at searching for, filtering, and synthesizing information from diverse sources. The Responses API makes building these agents more straightforward through its built-in web search capabilities.

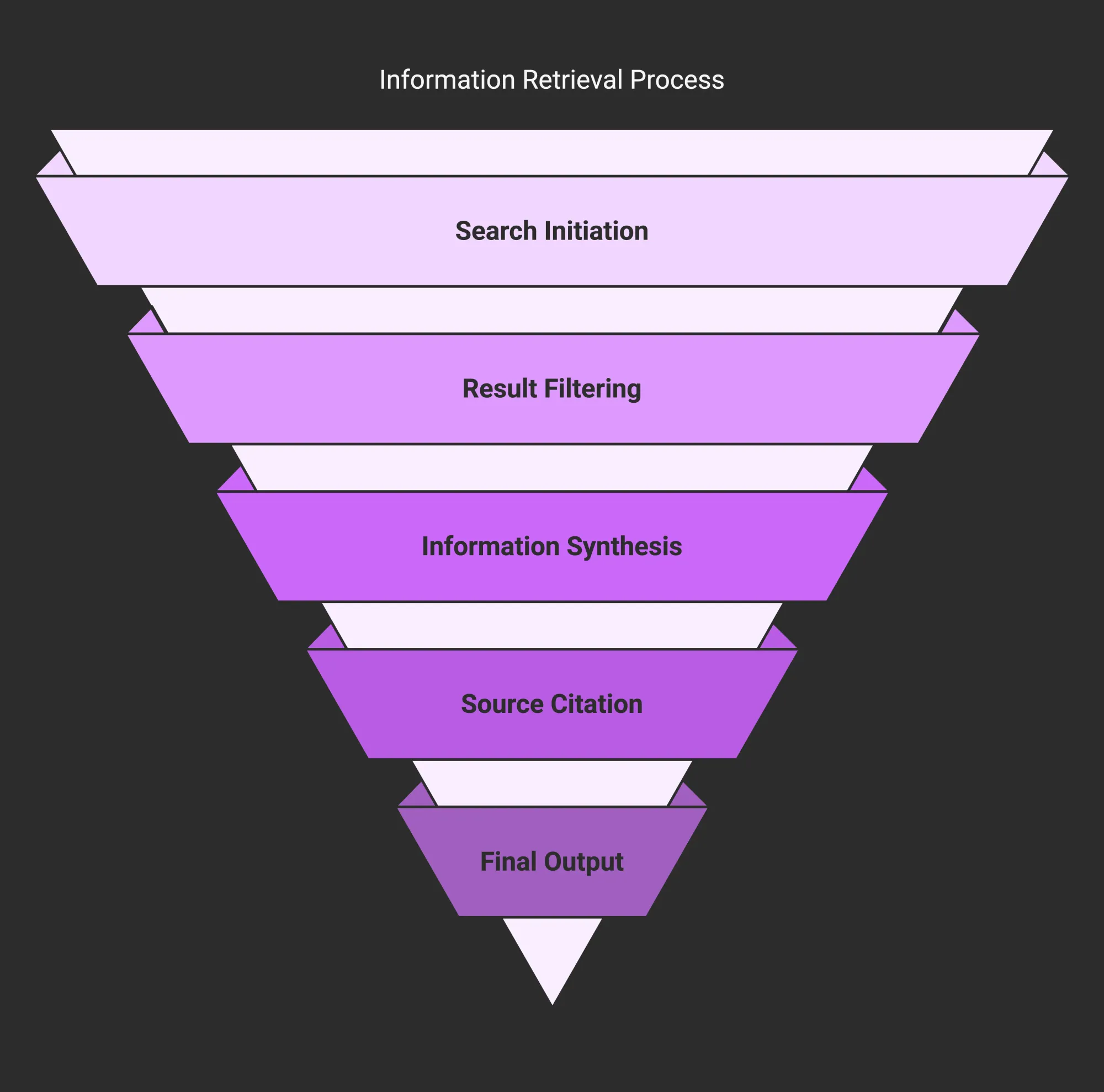

These agents can:

- Search across multiple sources simultaneously

- Filter and prioritize results based on relevance and credibility

- Synthesize information into coherent summaries

- Cite sources appropriately

- Provide both factual answers and nuanced analyses

Web search is what makes these agents useful for research: access to current information beyond the model's training data lets them answer questions that depend on up-to-date knowledge.

Integrating Web Search Effectively

The web search tool in the Responses API transforms how information retrieval agents function. Rather than being limited to static training data, agents can now:

- Access real-time information from the internet

- Verify facts against multiple sources

- Provide citations for their findings

- Adapt to new developments and changing information

Effective implementation involves:

- Crafting precise search queries based on user intent

- Filtering and evaluating results for relevance and reliability

- Synthesizing data from multiple sources

- Presenting findings in a coherent, organized format

Advanced Information Processing Techniques

Beyond basic search and retrieval, advanced research agents can employ sophisticated techniques for processing information:

- Multi-step research: Breaking complex queries into sub-questions

- Cross-verification: Checking information across multiple sources

- Stance analysis: Identifying potential biases in sources

- Summarization at multiple levels: Creating both executive summaries and detailed analyses

- Custom knowledge integration: Combining web data with proprietary information
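Cross-verification can be approximated in application code once findings have been extracted. The sketch below assumes each finding is an object with `claim` and `sourceUrl` fields (illustrative names) and treats a claim as corroborated when it appears on at least two distinct hosts. Matching claims by normalized text is a simplification; a real system would likely need semantic matching.

```javascript
// Count distinct source hosts per claim and flag claims seen on several hosts.
function crossVerify(findings, minSources = 2) {
  const hostsByClaim = new Map();
  for (const { claim, sourceUrl } of findings) {
    const key = claim.trim().toLowerCase();
    const host = new URL(sourceUrl).hostname;
    if (!hostsByClaim.has(key)) hostsByClaim.set(key, new Set());
    hostsByClaim.get(key).add(host);
  }
  return [...hostsByClaim.entries()].map(([claim, hosts]) => ({
    claim,
    sources: hosts.size,
    corroborated: hosts.size >= minSources,
  }));
}
```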

Implementation Guide

Here’s a practical example of implementing a research agent with the Responses API:

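    // Example of a research agent implementation.
    // Assumes the official `openai` Node package, an ES module context,
    // and OPENAI_API_KEY set in the environment.
    import OpenAI from "openai";

    const client = new OpenAI();

    const response = await client.responses.create({
      model: "gpt-4o",
      tools: [{ type: "web_search" }],
      input: `Research the latest developments in quantum computing,
    focusing on practical applications that might emerge in the
    next 3-5 years. Provide a balanced assessment of optimistic
    and skeptical viewpoints.`,
    });

    console.log(response.output_text);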
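To cite sources, a research agent needs the URLs behind its answer. The sketch below assumes that web search results surface as `url_citation` annotations on `output_text` parts inside message items of `response.output`; the mock object mirrors that assumed shape and is not real API output, so verify the structure against an actual response before relying on it.

```javascript
// Collect { title, url } pairs from url_citation annotations in a response.
function collectCitations(response) {
  const citations = [];
  for (const item of response.output ?? []) {
    if (item.type !== "message") continue;
    for (const part of item.content ?? []) {
      for (const note of part.annotations ?? []) {
        if (note.type === "url_citation") {
          citations.push({ title: note.title, url: note.url });
        }
      }
    }
  }
  return citations;
}

// Mock response used only to illustrate the assumed shape.
const mockResearchResponse = {
  output: [
    { type: "web_search_call" },
    {
      type: "message",
      content: [
        {
          type: "output_text",
          text: "Summary text...",
          annotations: [
            { type: "url_citation", title: "Example", url: "https://example.com/a" },
          ],
        },
      ],
    },
  ],
};
console.log(collectCitations(mockResearchResponse));
```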

Customer Service & Support Agents

Customer service and support represent one of the most high-impact applications for AI agents built with the Responses API. These agents can handle inquiries, troubleshoot issues, and provide personalized assistance at scale.

Creating Responsive Support Agents

Effective customer service agents need to be responsive, accurate, and empathetic. With the Responses API, you can create agents that:

- Respond instantly to inquiries

- Handle common questions and issues without human intervention

- Access knowledge bases to provide accurate information

- Maintain context throughout conversations for a personalized experience

- Know when to escalate complex issues to human agents

This approach allows companies to provide 24/7 support while freeing human agents to focus on more complex interactions.

Memory Management for Personalization

Effective support agents remember context and personalize interactions. Good memory management is what makes a support experience context-aware.

The Responses API simplifies memory management: when you pass the ID of an earlier response as `previous_response_id`, the API carries that conversation's context forward for you. This can be further enhanced by implementing:

- Short-term memory: Remembering details within a current conversation

- Long-term memory: Recalling customer information and preferences across sessions

- Episodic memory: Referencing specific past interactions and resolutions

Keeping short-term and long-term memory distinct helps you decide what belongs in the current conversation and what should be stored and retrieved across sessions.
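Short-term memory within a session can be sketched by tracking the last response ID per session and sending it as `previous_response_id` on the next turn. The in-memory `Map`, `buildTurn`, and `recordTurn` below are hypothetical names for illustration; a production system would likely persist this mapping.

```javascript
// Hypothetical in-memory store: session ID -> ID of the last response.
const lastResponseBySession = new Map();

// Build request parameters for the next turn of a session.
function buildTurn(sessionId, userMessage, model = "gpt-4o") {
  const params = { model, input: userMessage };
  const previous = lastResponseBySession.get(sessionId);
  if (previous) params.previous_response_id = previous;
  return params;
}

// Remember the response so the following turn can chain from it.
function recordTurn(sessionId, response) {
  lastResponseBySession.set(sessionId, response.id);
}
```

You would pass the result of `buildTurn` to `client.responses.create` and call `recordTurn` with the response it returns.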

Handling Complex Customer Queries

Complex customer queries often require multiple tools and capabilities. The Responses API excels here by allowing agents to:

- Search knowledge bases for product information and troubleshooting guides

- Access customer account data (with proper authentication)

- Reference past interactions and support tickets

- Look up current policies or promotions

- Generate personalized explanations and instructions

For example, a customer support agent might check a product’s return policy, look up a customer’s order status, and generate a personalized response explaining options—all within a single flow.
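Looking up order status typically means giving the model a function tool and running the call in your own code. The following sketch assumes the Responses API function-calling shape (`function_call` items with `name`, `arguments`, and `call_id`, answered with `function_call_output` items). The `get_order_status` tool and its stubbed handler are hypothetical.

```javascript
// Function tool definition; the tool name and parameters are hypothetical.
const orderStatusTool = {
  type: "function",
  name: "get_order_status",
  description: "Look up the shipping status of a customer's order.",
  parameters: {
    type: "object",
    properties: { order_id: { type: "string" } },
    required: ["order_id"],
    additionalProperties: false,
  },
  strict: true,
};

// Local implementations keyed by tool name (stubbed for illustration).
const toolHandlers = new Map([
  ["get_order_status", ({ order_id }) => ({ order_id, status: "shipped" })],
]);

// Run the model's function calls and build the outputs to send back.
function runFunctionCalls(response) {
  return (response.output ?? [])
    .filter((item) => item.type === "function_call" && toolHandlers.has(item.name))
    .map((item) => ({
      type: "function_call_output",
      call_id: item.call_id,
      output: JSON.stringify(toolHandlers.get(item.name)(JSON.parse(item.arguments))),
    }));
}
```

The returned items would be sent as `input` on a follow-up request so the model can write the final, personalized reply.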

Content Generation & Creative Agents

Content generation represents another powerful application of AI agents built with the Responses API. These agents can create various types of content—from blog posts and marketing copy to technical documentation—while leveraging real-time information and tailored guidelines.

Designing Agents for Content Creation

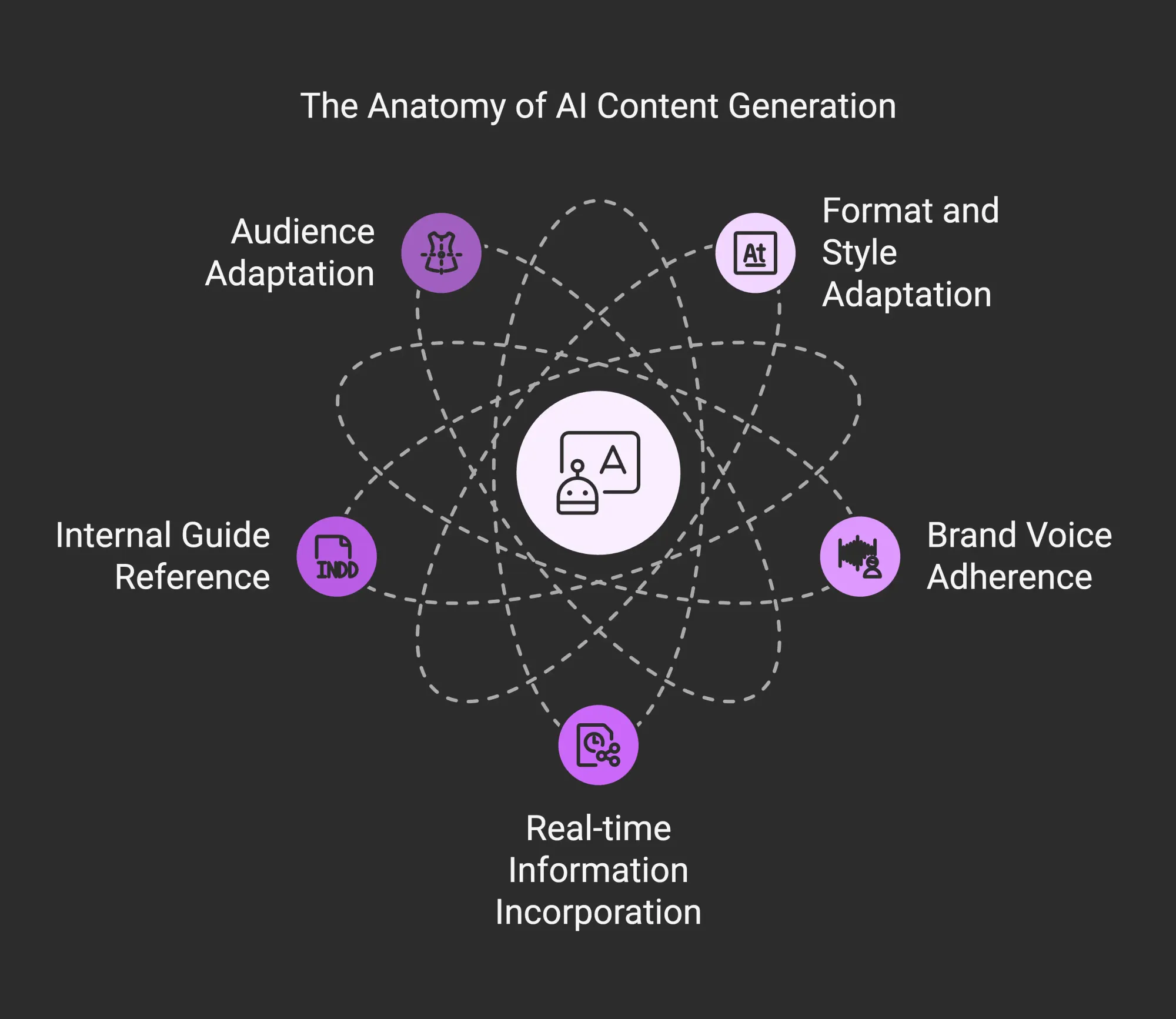

Effective content generation agents are designed to understand specific requirements and adapt their output accordingly. They can:

- Generate content in different formats and styles

- Adhere to brand voice and style guidelines

- Incorporate up-to-date information through web search

- Reference internal style guides and examples

- Adapt tone and complexity for different audiences

The Responses API’s ability to access both web data and internal documents makes it particularly powerful for content creation that requires factual accuracy and consistency.

Techniques for Guiding Creative Output

Creating high-quality content requires more than just generating text—it requires guiding the creative process effectively. Key techniques include:

- Detailed content briefs: Providing clear parameters for tone, style, length, and purpose

- Reference materials: Sharing examples of desired output

- Iterative refinement: Using feedback to improve generated content

- Fact verification: Ensuring accuracy through web search integration

- Strategic constraints: Setting boundaries that channel creativity effectively

These methods help ensure that the generated content meets objectives while maintaining quality and originality.

Integration with Content Systems

Content generation agents become even more valuable when integrated with existing content systems. The Responses API can work with:

- Content management systems

- Digital asset management platforms

- Marketing automation tools

- Social media scheduling platforms

- Email marketing systems

This allows for seamless workflows where content is generated, reviewed, and published without manual copying and pasting.

Real-World Examples

Content generation agents can be applied across numerous scenarios:

- Marketing teams: Creating blog posts, social media content, and email campaigns

- Product teams: Generating product descriptions and help documentation

- HR departments: Developing job descriptions, onboarding materials, and internal communications

- Educational organizations: Creating lesson plans, study guides, and assessment materials

Here’s a simplified implementation example:

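    // Example of a blog post generation agent.
    // Assumes the official `openai` Node package, an ES module context,
    // and OPENAI_API_KEY set in the environment.
    import OpenAI from "openai";

    const client = new OpenAI();

    const response = await client.responses.create({
      model: "gpt-4o",
      tools: [
        { type: "web_search" },
        // "vs_brand_guidelines" is a placeholder for the vector store holding your style guide.
        { type: "file_search", vector_store_ids: ["vs_brand_guidelines"] },
      ],
      input: `Create a comprehensive blog post about sustainable packaging
    innovations in the food industry. Include recent developments,
    industry statistics, and practical examples. The post should be
    informative but accessible to a general audience, approximately
    1200-1500 words. Follow our brand voice guidelines (accessible
    via file search) and verify all facts with web search.`,
    });

    console.log(response.output_text);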

Expert & Advisory AI Agents

Expert system and advisory agents are some of the most sophisticated applications of the Responses API. These agents encapsulate domain-specific knowledge and decision-making capabilities to provide specialized advice in particular fields.

Building Domain-Specific Knowledge Agents

Expert systems emulate the decision-making abilities of human experts in specific domains. With the Responses API, you can create agents that:

- Incorporate specialized knowledge in fields like finance, healthcare, or engineering

- Apply domain-specific rules and frameworks to analyze situations

- Provide reasoned recommendations based on best practices

- Access up-to-date field-specific information through web search

- Reference internal knowledge bases and proprietary information

Domain-specific AI agents are increasingly valuable as organizations recognize the potential of tailored AI systems that address their unique challenges.

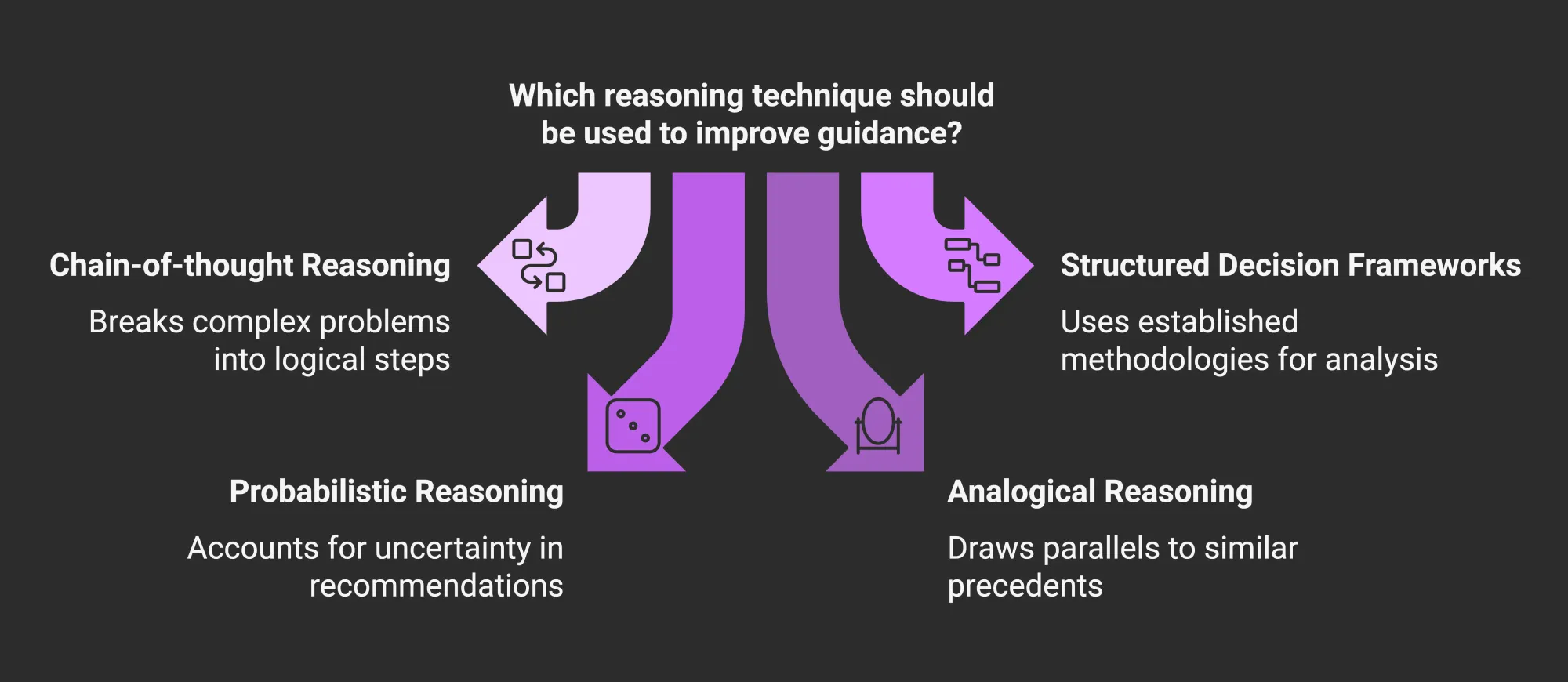

Techniques for Improving Reasoning Capabilities

Expert advisory agents require sophisticated reasoning to provide valuable guidance. Key techniques include:

- Chain-of-thought reasoning: Breaking complex problems into logical steps

- Structured decision frameworks: Using established methodologies for analyzing options

- Probabilistic reasoning: Accounting for uncertainty in recommendations

- Analogical reasoning: Drawing parallels to similar precedents

- Multi-perspective analysis: Considering problems from multiple viewpoints

Implementing these techniques helps ensure advisory agents provide thoughtful, nuanced guidance rather than simplistic answers.

Implementing Multi-Step Decision Processes

Many expert systems need to guide users through multi-step decisions. The Responses API facilitates this by:

- Maintaining conversation context throughout the decision journey

- Breaking complex decisions into manageable steps

- Requesting additional information when needed

- Adapting recommendations based on user input

- Explaining reasoning to build user confidence

This approach allows for more interactive and personalized advisory experiences.

Applications Across Industries

Expert system agents can be applied in various sectors:

- Finance: Investment advisors, tax planning assistants, financial literacy coaches

- Healthcare: Diagnostic assistants, treatment option analyzers, wellness coaches

- Legal: Contract analysis tools, compliance advisors, case research assistants

- Education: Subject matter tutors, study plan developers, learning style adapters

- Engineering: Design review assistants, troubleshooting guides, material selection advisors

Here’s a simplified example of an expert system for investment advice:

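    // Example of a financial advisory agent.
    // Assumes the official `openai` Node package, an ES module context,
    // and OPENAI_API_KEY set in the environment.
    import OpenAI from "openai";

    const client = new OpenAI();

    const response = await client.responses.create({
      model: "gpt-4o",
      tools: [
        { type: "web_search" },
        // "vs_your_store_id" is a placeholder for your internal knowledge base.
        { type: "file_search", vector_store_ids: ["vs_your_store_id"] },
      ],
      input: `Based on my investment profile (risk tolerance: moderate,
    investment horizon: 15 years, current portfolio: 60% stocks,
    30% bonds, 10% cash), analyze current market conditions and
    recommend potential adjustments to my asset allocation.
    Consider recent economic indicators and provide reasoning.`,
    });

    console.log(response.output_text);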

Multi-Agent System Architectures

As AI applications grow more sophisticated, multi-agent architectures become increasingly important. These systems leverage multiple specialized agents working together to handle complex tasks that a single agent might struggle to manage effectively.

Coordinating Multiple Specialized Agents

Multi-agent systems require careful coordination to function effectively. Key approaches include:

- Orchestration layers: Central systems that direct work to specialized agents

- Role-based specialization: Defining clear responsibilities for each agent

- Shared context: Ensuring all agents have access to necessary information

- Priority and conflict resolution: Establishing rules for handling competing priorities

The open-source Agents SDK provided alongside the Responses API offers valuable tools for implementing these coordination mechanisms.

Implementing Agent-to-Agent Handoffs

Smooth handoffs between agents are crucial for maintaining conversation continuity and ensuring tasks are completed effectively. Effective handoffs require:

- Clear transfer protocols that include relevant context

- Consistent state management across agents

- Transparent transitions from the user’s perspective

- Fallback mechanisms when handoffs fail

For example, a customer service system might include separate agents for initial triage, technical support, billing inquiries, and escalation—each picking up where the previous agent left off.

Managing Context Across Agent Interactions

Context management is even more critical in multi-agent systems. Strategies include:

- Centralized context repositories: Shared knowledge bases accessible to all agents

- Context summarization: Condensing relevant information when transferring between agents

- Selective information sharing: Passing only pertinent details to specialized agents

- Context versioning: Tracking how understanding evolves across agent interactions

These approaches ensure that users experience a cohesive interaction despite multiple specialized agents working behind the scenes.

Code Examples for Multi-Agent Orchestration

Here's a simplified example of how you might implement a multi-agent system using the Responses API directly. The Agents SDK offers higher-level handoff primitives for the same pattern.

    // Example of a multi-agent system with handoffs.
    // Assumes the official `openai` Node package, an ES module context,
    // and OPENAI_API_KEY set in the environment.
    import OpenAI from "openai";

    const client = new OpenAI();
    const userQuery = "The app crashes when I export a report."; // example input

    // First agent: initial triage
    const triageResponse = await client.responses.create({
      model: "gpt-4o",
      instructions:
        "Classify the query as exactly one of: technical issue, billing question, general inquiry. Reply with the label only.",
      input: userQuery,
    });
    const triage = triageResponse.output_text.toLowerCase();

    // Based on triage, route to the appropriate specialized agent
    if (triage.includes("technical issue")) {
      const techSupportResponse = await client.responses.create({
        model: "gpt-4o",
        tools: [
          { type: "web_search" },
          // "vs_your_store_id" is a placeholder for your troubleshooting knowledge base.
          { type: "file_search", vector_store_ids: ["vs_your_store_id"] },
        ],
        instructions:
          "You are a technical support specialist. Provide detailed troubleshooting guidance.",
        input: `Triage result: ${triage}\n\nOriginal user query: ${userQuery}`,
      });
      // Return tech support response
      console.log(techSupportResponse.output_text);
    } else if (triage.includes("billing")) {
      // Route to billing agent with context
      // ...
    }

This example demonstrates a basic handoff between a triage agent and specialized support agents. The triage result and the original query are both passed to the specialist, so context carries over.
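Routing on a substring of free-form model text can misfire, for example when the triage agent writes "not a technical issue". One way to harden the handoff is to map the triage text onto a fixed set of routes with a safe default. The route labels and `parseRoute` below are illustrative assumptions, and the approach works best when the triage instructions ask for a single label.

```javascript
const ROUTES = ["technical", "billing", "general"];

// Map triage text to exactly one known route; fall back to "general" when unclear.
function parseRoute(triageText) {
  const normalized = triageText.trim().toLowerCase();
  const exact = ROUTES.find((route) => normalized === route);
  if (exact) return exact;
  const mentioned = ROUTES.filter((route) => normalized.includes(route));
  return mentioned.length === 1 ? mentioned[0] : "general";
}
```

Ambiguous answers that mention several routes fall through to the general agent instead of being sent to the wrong specialist.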

Technical Implementation Deep Dive

Building effective AI agents requires thoughtful technical implementation. This section explores key aspects of agent development, including memory management, tool integration, error handling, and security considerations.

Memory Management Strategies

Effective memory management is critical for maintaining context and enabling personalized interactions. Key strategies include:

- Short-term memory: Maintaining immediate conversation context within the current session

- Long-term memory: Storing and retrieving information about users and past interactions

- Semantic memory: Organizing knowledge in a structured, queryable format

- Episodic memory: Recording specific interactions and events chronologically

These memory types often work together. For instance, a customer service agent might use short-term memory for the current conversation while accessing long-term memory to personalize responses based on customer history.

Tool Integration Patterns and Best Practices

The Responses API simplifies tool integration, but effective implementation still requires careful consideration. Best practices include:

- Clear tool definitions: Precisely defining what each tool does and when it should be used

- Progressive disclosure: Starting with simple tool configurations and adding complexity gradually

- Tool combination strategies: Understanding how different tools complement each other

- Parameter optimization: Tuning tool parameters for optimal performance

For example, effectively combining web and file search requires understanding when to use each—web for current public information and file search for proprietary data.

Error Handling and Fallback Mechanisms

Robust error handling is essential for production-grade AI agents. Key strategies include:

- Graceful degradation: Maintaining functionality when a component fails

- Retry mechanisms: Automatically attempting failed operations with backoff

- Alternative paths: Providing multiple ways to accomplish tasks when preferred methods fail

- User-friendly error messages: Communicating issues clearly

- Human escalation: Knowing when to involve human operators

These approaches ensure that AI agents remain useful and trustworthy even when facing unexpected situations.
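Retry with backoff and a fallback path can be combined in one small wrapper. This is a minimal sketch with hypothetical names (`backoffDelay`, `withRetry`); a production version would usually add jitter and retry only errors that are worth retrying, such as rate limits or transient server errors.

```javascript
// Delay before retry number `attempt` (0-based): base * 2^attempt, capped at maxMs.
function backoffDelay(attempt, baseMs = 500, maxMs = 8000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Run `operation`; on failure wait and retry, then use `fallback` if provided.
async function withRetry(operation, { retries = 3, fallback, sleep } = {}) {
  const wait = sleep ?? ((ms) => new Promise((resolve) => setTimeout(resolve, ms)));
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation();
    } catch (error) {
      if (attempt === retries) {
        if (fallback) return fallback(error); // graceful degradation
        throw error;
      }
      await wait(backoffDelay(attempt));
    }
  }
}
```

A fallback might return a cached answer, a simpler response without tools, or a message that hands the user to a human operator.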

Security Considerations and Compliance Requirements

Securing AI agents with proper authentication and maintaining compliance with relevant regulations are critical aspects of implementation. Key considerations include:

- Authentication and authorization: Ensuring only authorized users can access agent capabilities

- Data privacy: Protecting sensitive information shared during interactions

- Audit trails: Maintaining records of agent actions for compliance and debugging

- Access controls: Limiting which systems agents can access

- Compliance with regulations: Adhering to legal requirements like GDPR, HIPAA, or industry-specific rules

Security must be a core consideration from the start. Identifying potential vulnerabilities early can help mitigate risks before they become problems.

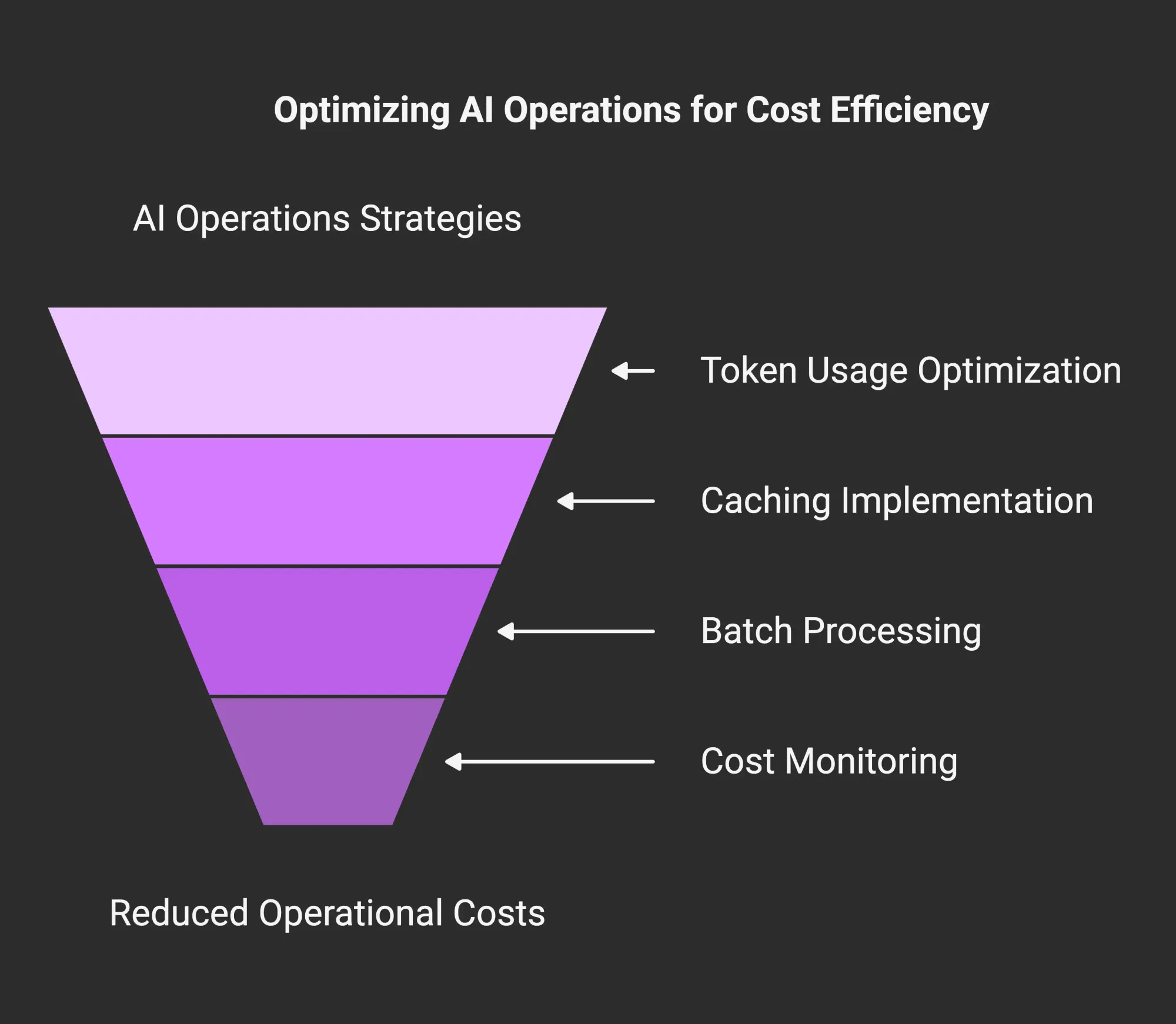

Performance Optimization & Cost Management

As AI agent usage scales, performance optimization and cost management become increasingly important. Effective strategies can reduce operational expenses while maintaining or improving response quality and speed.

Token Usage Optimization Techniques

API costs are based largely on token usage, so reducing the tokens you send and receive directly reduces spend. Key techniques include:

- Concise prompts: Crafting clear but brief instructions

- Strategic information placement: Putting vital information at the beginning of prompts

- Controlled verbosity: Limiting unnecessary explanation

- Response length parameters: Setting appropriate maximum lengths

- Output format optimization: Using formats that convey information efficiently

These techniques can reduce token consumption without sacrificing output quality.

Implementing Effective Caching Strategies

Caching is one of the most effective ways to reduce OpenAI API costs. Effective caching approaches include:

- Result caching: Storing responses for common queries

- Semantic caching: Recognizing and retrieving answers for semantically similar queries

- Partial result caching: Storing intermediate results in multi-step processes

- Cache invalidation: Determining when cached data becomes outdated

Implementing a robust caching layer can reduce API calls substantially for applications with repetitive queries or predictable usage patterns.
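Result caching with time-based invalidation can be sketched as a small class. `ResponseCache` is a hypothetical name, and the key normalization (trimming, lowercasing, collapsing whitespace) only catches near-identical queries; semantic caching would need embeddings, which this sketch does not attempt.

```javascript
// In-memory result cache with a time-to-live; `now` is injectable for testing.
class ResponseCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map();
  }

  // Normalize a query so trivially different phrasings share one cache key.
  static keyFor(query) {
    return query.trim().toLowerCase().replace(/\s+/g, " ");
  }

  get(query) {
    const key = ResponseCache.keyFor(query);
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() - entry.storedAt > this.ttlMs) {
      this.entries.delete(key); // entry is stale: invalidate it
      return undefined;
    }
    return entry.value;
  }

  set(query, value) {
    this.entries.set(ResponseCache.keyFor(query), { value, storedAt: this.now() });
  }
}
```

Check the cache before calling the API and store the answer afterwards; choose the TTL based on how quickly the underlying information goes out of date.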

Batch Processing for Efficiency

For scenarios that don’t require immediate responses, batch processing can significantly improve efficiency and reduce costs. Strategies include:

- Request batching: Combining multiple user requests into fewer API calls

- Asynchronous processing: Handling non-urgent tasks during off-peak periods

- Workload optimization: Structuring tasks to minimize context switching

- Prioritization: Handling time-sensitive requests first

These approaches can improve resource utilization while lowering operational costs.

Monitoring and Managing Operational Costs

Controlling costs when using the OpenAI API requires ongoing monitoring and management. Key strategies include:

- Usage analytics: Tracking API consumption patterns and identifying optimization opportunities

- Budget alerts: Setting up notifications for usage thresholds

- Rate limiting: Preventing unintended overuse during spikes

- Model selection: Choosing the right model for each task

- Regular optimization reviews: Periodically refining your implementation

These practices enable sustainable scaling of AI agent deployments while keeping costs predictable.
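Usage analytics and budget alerts can start from the `usage` object returned with each response, assumed here to carry `input_tokens` and `output_tokens`. In the sketch below, `createUsageTracker` is a hypothetical helper and the prices are caller-supplied placeholders in USD per million tokens, not actual rates.

```javascript
// Accumulate estimated spend and fire `onAlert` once when the budget is reached.
function createUsageTracker({ inputPricePerM, outputPricePerM, budgetUsd, onAlert }) {
  let spentUsd = 0;
  let alerted = false;
  return {
    record(usage) {
      spentUsd +=
        (usage.input_tokens * inputPricePerM + usage.output_tokens * outputPricePerM) / 1e6;
      if (!alerted && spentUsd >= budgetUsd) {
        alerted = true;
        onAlert(spentUsd);
      }
      return spentUsd;
    },
  };
}
```

Calling `tracker.record(response.usage)` after every request gives a running estimate that you can compare against the dashboard figures.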

Security & Ethics Best Practices

As AI agents become more capable and integrated into critical systems, security and ethical considerations become increasingly important. Robust safeguards and ethical frameworks are essential for responsible AI deployment.

Protecting Against Prompt Injection and Other Vulnerabilities

AI agents face various security threats, with prompt injection being particularly concerning. Key protection strategies include:

- Input validation: Filtering and sanitizing user inputs

- Prompt engineering safeguards: Designing prompts resistant to manipulation

- Rate limiting: Preventing abuse through excessive requests

- Monitoring for anomalous behavior: Detecting unusual patterns

- Regular security testing: Conducting adversarial checks to identify vulnerabilities

These measures help protect against adversaries attempting to manipulate AI agents.
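Input validation can be sketched as a screening step that runs before user text reaches the model. The patterns and length cap below are illustrative assumptions. Pattern matching catches only crude attempts, so treat this as one layer alongside restricted tool permissions and monitoring, not as a complete defense.

```javascript
// Illustrative patterns for obvious injection attempts; far from exhaustive.
const SUSPICIOUS_PATTERNS = [
  /ignore (all |any )?(previous|prior) instructions/i,
  /reveal (your )?(system prompt|instructions)/i,
];

// Strip control characters, cap the length, and flag suspicious phrasing.
function screenUserInput(text, maxLength = 4000) {
  // Remove control characters except tab and newline.
  const cleaned = text
    .replace(/[\u0000-\u0008\u000B-\u001F\u007F]/g, "")
    .slice(0, maxLength);
  const flagged = SUSPICIOUS_PATTERNS.some((pattern) => pattern.test(cleaned));
  return { cleaned, flagged };
}
```

Flagged inputs could be rejected, logged for review, or routed to a more restricted agent.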

Data Privacy Considerations

AI agents often process sensitive information, making data privacy a critical concern. Best practices include:

- Data minimization: Collecting and processing only necessary information

- Encryption: Protecting data in transit and at rest

- Anonymization: Removing personally identifiable information when possible

- Transparent privacy policies: Clearly communicating data handling practices

- Retention policies: Defining how long information is stored

These approaches protect user privacy while enabling contextually relevant experiences.
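Anonymization can begin with redacting obvious identifiers before text is sent to the API or written to logs. The regular expressions below are simplified assumptions that cover common email and phone formats only. They may also redact other long digit sequences such as order numbers, so tune them to your data.

```javascript
// Replace email addresses and phone-like numbers with placeholders.
function redactPii(text) {
  return text
    .replace(/[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g, "[EMAIL]")
    .replace(/\+?\d[\d\s().-]{7,}\d/g, "[PHONE]");
}

console.log(redactPii("Contact jane.doe@example.com or +1 (555) 123-4567."));
// Contact [EMAIL] or [PHONE].
```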

Implementing Proper Authentication and Authorization

Securing AI agents with proper authentication is crucial, especially for agents with access to sensitive data or capabilities. Key strategies include:

- Multi-factor authentication: Requiring multiple verification methods

- Role-based access control: Limiting capabilities based on user roles

- Token-based authentication: Using secure, temporary tokens for API access

- Fine-grained permissions: Applying the principle of least privilege

- Regular access reviews: Periodically validating permissions

These measures help ensure AI agents are accessible only to authorized users and perform only authorized actions.
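Role-based access control and least privilege can be applied to the tools an agent receives. In this sketch the role names and the `toolsForRole` helper are hypothetical: each role maps to an allowlist of built-in tool types, and unknown roles get no tools at all.

```javascript
// Hypothetical allowlist of built-in tool types per user role.
const ALLOWED_TOOLS = new Map([
  ["viewer", ["web_search"]],
  ["analyst", ["web_search", "file_search"]],
]);

// Build the `tools` array for a request based on the caller's role.
function toolsForRole(role, vectorStoreIds = []) {
  const allowed = ALLOWED_TOOLS.get(role) ?? []; // unknown roles get nothing
  return allowed
    .filter((type) => type !== "file_search" || vectorStoreIds.length > 0)
    .map((type) =>
      type === "file_search" ? { type, vector_store_ids: vectorStoreIds } : { type }
    );
}
```

Resolving permissions in application code, before the request is built, means the model never sees a tool the user is not entitled to use.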

Ethical Deployment Guidelines by Industry

Different industries have specific ethical considerations for AI deployment:

- Healthcare: Prioritizing patient privacy, maintaining human oversight for critical decisions

- Finance: Preventing algorithmic bias, ensuring explainability, maintaining regulatory compliance

- Education: Protecting student privacy, avoiding reinforcement of existing inequalities

- Legal: Ensuring fairness, confidentiality, supporting due process

- Customer Service: Transparency about AI use, maintaining service quality, providing human escalation paths

These guidelines help ensure that AI agents are deployed responsibly and address the unique challenges of each sector.

Testing & Evaluation Strategies

Comprehensive testing and evaluation are essential for developing reliable, effective AI agents. A systematic approach helps identify issues, optimize performance, and ensure agents meet user needs.

Methods for Evaluating Agent Performance

Effective evaluation assesses multiple dimensions of performance:

- Task success rate: How often the agent completes assigned tasks

- Response quality: Accuracy, relevance, and usefulness of content

- Reasoning capability: Logical consistency and soundness

- Tool usage efficiency: Appropriate selection and effective use of available tools

- Robustness: Performance under unexpected or edge-case inputs

These dimensions should be evaluated through automated metrics and human assessment for a complete view.

Automated Testing Approaches

Automated testing enables consistent, scalable evaluation. Key approaches include:

- Regression testing: Ensuring new versions maintain or improve upon previous performance

- Benchmark testing: Comparing against standard datasets

- Adversarial testing: Evaluating resilience against challenging inputs

- Stress testing: Assessing performance under high load

- Simulation testing: Evaluating in controlled environments before real-world deployment

Automated testing can identify issues across a broader range of scenarios than manual testing alone.
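A regression suite can be as simple as a list of inputs with checks on the output, run against each new version. The harness below is a sketch with hypothetical names, and it uses a synchronous stub in place of the agent so it runs on its own. A real agent call would be asynchronous and would need to be awaited.

```javascript
// Run every case through `agent` and report the task success rate.
function evaluate(agent, cases) {
  const results = cases.map(({ name, input, check }) => {
    let passed = false;
    try {
      passed = Boolean(check(agent(input)));
    } catch {
      passed = false; // a thrown error counts as a failed case
    }
    return { name, passed };
  });
  const passedCount = results.filter((result) => result.passed).length;
  return { successRate: cases.length ? passedCount / cases.length : 0, results };
}

// Flag a regression when the new success rate drops below the baseline.
function isRegression(report, baselineRate) {
  return report.successRate < baselineRate;
}
```

Checks can assert on structure (valid JSON, required sections) when exact wording varies from run to run.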

User Feedback Integration

User feedback provides crucial insights into real-world performance. Effective integration strategies include:

- Feedback collection mechanisms: Post-interaction surveys, user interviews

- Feedback categorization: Classifying issues to identify patterns

- Feedback loops: Systematically incorporating insights into development

- A/B testing: Comparing alternative approaches based on user reception

- Long-term satisfaction tracking: Monitoring changes in user satisfaction over time

User feedback helps ensure that technical performance translates into actual user value.
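The A/B testing idea above can be sketched in a few lines. This example assumes feedback arrives as simple thumbs-up or thumbs-down signals per user; `assign_variant` and `satisfaction_by_variant` are hypothetical helpers, and a real experiment would also need a significance test before drawing conclusions.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id, variants=("A", "B")):
    """Deterministically map a user to a variant so they always see the same one."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def satisfaction_by_variant(feedback):
    """Compute the share of positive feedback per variant.

    `feedback` is an iterable of (user_id, thumbs_up) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # variant -> [positive, total]
    for user_id, thumbs_up in feedback:
        variant = assign_variant(user_id)
        totals[variant][0] += int(thumbs_up)
        totals[variant][1] += 1
    return {variant: pos / count for variant, (pos, count) in totals.items()}


rates = satisfaction_by_variant([("user-1", True), ("user-1", False)])
print(rates)
```

Hashing the user id, rather than choosing randomly on each request, keeps the experience consistent for each user across sessions.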

Continuous Improvement Techniques

AI agent development is an iterative process that requires ongoing refinement. Key approaches include:

- Performance monitoring: Tracking key metrics in production

- Error analysis: Investigating patterns in failures to identify root causes

- Prompt refinement: Iteratively improving prompts based on performance data

- Regular retraining: Updating any fine-tuned models or retrieval data as new data becomes available

- Competitive benchmarking: Comparing against alternative solutions to identify gaps

These techniques help ensure AI agents continue to improve over time, adapting to changing requirements.
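Performance monitoring and error analysis can start very simply. The sketch below is one possible shape, assuming each production interaction is reduced to a success flag and an optional error category; the `RollingMonitor` class and its thresholds are illustrative, not a prescribed design.

```python
from collections import Counter, deque


class RollingMonitor:
    """Track the recent success rate and the most common failure categories."""

    def __init__(self, window=100, alert_below=0.9):
        self.outcomes = deque(maxlen=window)  # only the most recent outcomes
        self.errors = Counter()               # cumulative counts, not windowed
        self.alert_below = alert_below

    def record(self, success, error_category=None):
        self.outcomes.append(success)
        if not success:
            self.errors[error_category or "unknown"] += 1

    def success_rate(self):
        if not self.outcomes:
            return 1.0  # no data yet, so nothing to alert on
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        return self.success_rate() < self.alert_below

    def top_errors(self, n=3):
        return self.errors.most_common(n)


monitor = RollingMonitor(window=4, alert_below=0.75)
for ok, category in [(True, None), (True, None),
                     (False, "timeout"), (False, "timeout")]:
    monitor.record(ok, category)
print(monitor.success_rate(), monitor.top_errors(1))
```

The most frequent error categories are a natural starting point for root-cause analysis and for deciding which prompts to refine first.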

Common Challenges & Solutions

Building AI agents with the Responses API involves navigating various challenges. Understanding these issues and their solutions can help you create more robust and effective agents.

Handling Context Limitations

Despite advances in model capabilities, context limitations remain a challenge for complex interactions. Common solutions include:

- Context summarization: Condensing previous interactions to essential information

- Strategic context pruning: Removing less relevant data when approaching token limits

- Hierarchical memory systems: Storing information with varying retention periods

- Information prioritization: Ensuring the most critical context remains accessible

Understanding tokens, cost, and speed with OpenAI’s APIs is crucial for managing context effectively while balancing performance considerations.
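Strategic context pruning can be sketched as follows. This is a simplified illustration: it assumes messages are dictionaries with `role` and `content` keys, and it estimates tokens with a rough four-characters-per-token heuristic instead of a real tokenizer, so treat the counts as approximate.

```python
def estimate_tokens(text):
    # Rough heuristic (about 4 characters per token); an assumption for this
    # sketch, not a real tokenizer.
    return max(1, len(text) // 4)


def prune_context(messages, max_tokens):
    """Keep system messages plus the newest other messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(others):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break  # older messages are dropped once the budget is used up
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "x" * 40},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 40},
]
pruned = prune_context(history, max_tokens=30)
print([m["role"] for m in pruned])
```

Dropping the oldest turns first is the simplest prioritization rule. A fuller implementation might replace the dropped turns with a summary instead of discarding them.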

Managing Tool Transitions Effectively

Smooth transitions between different tools are essential for complex tasks. Key strategies include:

- Clear tool selection criteria: Defining when to use each available tool

- Context preservation during transitions: Retaining relevant data when switching tools

- Graceful fallbacks: Handling situations where a tool doesn’t provide expected results

- Progress tracking: Monitoring multi-step processes involving multiple tools

These approaches ensure tool transitions appear seamless to users while maintaining effectiveness.
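Graceful fallbacks and progress tracking can be combined in a small helper. In the sketch below, each tool is represented as a plain Python callable that takes a query; this is an assumption made to keep the example self-contained, and `run_with_fallbacks` is a hypothetical name.

```python
def run_with_fallbacks(query, tools):
    """Try each (name, callable) in order and return the first usable result.

    Returns (tool_name, result, attempts), where `attempts` records what
    happened at every step so a multi-tool process can be traced afterwards.
    """
    attempts = []
    for name, tool in tools:
        try:
            result = tool(query)
        except Exception as exc:
            attempts.append((name, f"error: {exc}"))
            continue
        if result:
            attempts.append((name, "ok"))
            return name, result, attempts
        attempts.append((name, "empty result"))
    return None, None, attempts


def failing(query):
    raise RuntimeError("down")


def empty(query):
    return ""


def good(query):
    return f"answer to {query}"


outcome = run_with_fallbacks(
    "hi", [("failing", failing), ("empty", empty), ("good", good)]
)
print(outcome)
```

Returning the attempt log alongside the result means the caller can tell the user when a fallback was used, or log it for later analysis.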

Addressing Security Concerns

As AI agents gain more capabilities, security concerns become increasingly important. Effective measures include:

- Comprehensive threat modeling: Identifying potential vulnerabilities

- Least privilege principles: Limiting agent capabilities to what’s necessary

- Regular security audits: Proactively testing for weaknesses

- Sandboxing: Isolating agent operations to prevent broader system access

Threat modeling for Computer-Using Agents can help mitigate security risks before they become significant problems.
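The least privilege principle can be enforced with an explicit allowlist checked before any tool call runs. The role names and tool labels below are hypothetical placeholders for illustration; map them to whatever roles and tools your own agents use.

```python
# Hypothetical roles and tool labels; each role gets only what it needs.
ALLOWED_TOOLS = {
    "support_agent": {"file_search"},
    "research_agent": {"web_search", "file_search"},
}


def authorize_tool_call(agent_role, tool_name):
    """Raise PermissionError unless the role is explicitly allowed the tool."""
    allowed = ALLOWED_TOOLS.get(agent_role, set())  # unknown roles get nothing
    if tool_name not in allowed:
        raise PermissionError(
            f"{agent_role!r} is not permitted to use {tool_name!r}"
        )


authorize_tool_call("research_agent", "web_search")  # permitted, returns quietly

try:
    authorize_tool_call("support_agent", "computer_use")
    denied = False
except PermissionError:
    denied = True
print("denied:", denied)
```

Denying by default means a newly added tool stays unavailable until someone deliberately grants it to a role.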

Troubleshooting Integration Issues

Integration challenges are common when connecting AI agents to existing systems. Common solutions include:

- API versioning strategies: Managing changes in API specifications

- Comprehensive logging: Recording details about interactions for debugging

- Testing environments: Isolated environments for validating integrations

- Graceful degradation: Maintaining functionality when integrations fail

- Circuit breakers: Preventing cascading failures when dependencies are unavailable

These steps help ensure reliable integration and consistent agent performance.
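The circuit breaker pattern mentioned above can be sketched as a small wrapper around calls to a dependency. This is a simplified version with assumed defaults (three failures, a 30-second cool-down); the class and exception names are illustrative, and a production implementation would likely add logging and thread safety.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when calls are blocked because the dependency keeps failing."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self.clock = clock              # injectable so tests can control time
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("dependency temporarily disabled")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open the circuit
            raise
        self.failures = 0  # any success closes the circuit fully
        return result


breaker = CircuitBreaker(max_failures=2, reset_after=10.0)
print(breaker.call(lambda: "ok"))
```

While the circuit is open, callers fail fast instead of waiting on a dependency that is known to be down, which pairs naturally with graceful degradation.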

Future Directions & Emerging Patterns

The field of AI agents is evolving rapidly, with new capabilities and methods emerging. Understanding these future directions can help you make strategic decisions about AI agent implementations.

Upcoming Developments in the Responses API

Although the specific roadmap may not be public, several trends suggest possible developments:

- Expanded tool capabilities: Additional built-in tools beyond current offerings

- Enhanced memory mechanisms: More sophisticated approaches to context and personalization

- Improved multimodal capabilities: Better handling of images, audio, and video

- Fine-tuning options: More customization for specialized implementations

- Advanced orchestration: More refined tools for managing multi-agent systems

If they materialize, these advancements would expand what's possible with AI agents while making them more accessible and flexible.

Emerging Agent Design Patterns

Several design patterns are proving effective:

- Specialist/generalist architecture: Combining broad generalist agents with deep domain specialists

- Progressive disclosure: Starting with basic capabilities and revealing more advanced features as needed

- Feedback-driven refinement: Using user feedback to improve responses continuously

- Context-aware tool selection: Dynamically choosing tools based on the specific task

- Safety-first design: Building safeguards and ethical considerations early

These patterns are likely to become standard in agent design as the technology matures.

Industry Trends and Opportunities

Several trends are shaping the future of AI agents:

- Increasing autonomy: Agents capable of more independent decision-making

- Domain specialization: Agents custom-built for specific industries and use cases

- Integration with robotics and IoT: AI agents controlling physical systems

- Collaborative agent ecosystems: Multiple agents working together in complementary roles

- Personalization at scale: Agents adapting to individual user preferences

Organizations that effectively leverage these trends can create innovative products, services, and experiences.

Preparing for Future Capabilities

To position your projects for success as AI agent capabilities evolve, consider:

- Building modular architectures: Designing systems that can easily incorporate new capabilities

- Investing in data quality: Creating clean, well-structured datasets for AI consumption

- Developing AI governance frameworks: Establishing guidelines for responsible AI use

- Creating feedback mechanisms: Capturing and incorporating user feedback continuously

- Fostering multidisciplinary teams: Combining technical expertise with domain knowledge and ethical perspectives

These preparations can help you capitalize on new capabilities while managing associated risks and challenges.

Conclusion & Key Insights

The OpenAI Responses API represents a significant advancement in AI agent development, making sophisticated capabilities accessible to a broader range of developers while expanding what's possible with AI systems. You can build various types of AI agents capable of automating tasks, retrieving and synthesizing information, supporting customers, generating content, and providing expert advice.

Key Takeaways for Implementation

As you begin building AI agents with the Responses API, remember these principles:

- Start with clear objectives: Define specific problems to solve and metrics for success

- Choose appropriate agent types: Match the agent architecture to your needs

- Implement robust security measures: Protect against vulnerabilities from the outset

- Optimize for performance and cost: Balance capabilities with efficiency

- Plan for continuous improvement: Gather feedback and refine your agent over time

These strategies will help you develop practical AI agents that deliver real value.

Getting Started with Your First Agent

To build your first agent, consider this step-by-step approach:

- Select a simple, well-defined use case where AI can provide clear value.

- Determine which built-in tools (web search, file search, computer use) your agent needs.

- Design your initial prompts and test them with different inputs.

- Implement basic error handling and fallback mechanisms.

- Gather feedback from test users and refine your implementation.

- Monitor performance and gradually expand capabilities.

This incremental process allows you to gain experience and confidence while delivering tangible results.
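As a starting point for the steps above, here is a minimal sketch of a single-turn agent call with basic error handling. It assumes the official `openai` Python package, whose client exposes `responses.create` and an `output_text` property on the response. The model name and the `web_search_preview` tool type are assumptions to verify against the current documentation, and `ask_agent` is a hypothetical helper.

```python
def ask_agent(client, question, model="gpt-4o", use_web_search=False):
    """Send one question through the Responses API with a simple fallback.

    `client` is expected to be an `openai.OpenAI()` instance. The model name
    and tool type below are assumptions; check them against current docs.
    """
    tools = [{"type": "web_search_preview"}] if use_web_search else []
    try:
        response = client.responses.create(
            model=model,
            input=question,
            tools=tools,
        )
    except Exception as exc:
        # Basic fallback: return a readable message instead of crashing.
        return f"Sorry, something went wrong ({type(exc).__name__}). Please try again."
    text = response.output_text
    return text if text else "I could not produce an answer for that request."


# Usage (requires the `openai` package and an OPENAI_API_KEY environment variable):
#   from openai import OpenAI
#   print(ask_agent(OpenAI(), "Summarize today's AI news.", use_web_search=True))
```

Passing the client in as an argument keeps the function easy to test with a stand-in object before you spend any API credits.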

Resources for Continued Learning

To deepen your understanding and stay updated on best practices, consider exploring:

- OpenAI's official documentation on agent tools

- Industry case studies showcasing successful implementations

- Open-source projects building on the Agents SDK

- AI security resources and best practices

These resources can provide ongoing guidance as you expand your AI agent implementations.

By building AI agents that are helpful, secure, and aligned with user needs, you can contribute to a future where AI technology serves humanity’s best interests. The journey is just beginning, and the opportunities are immense.